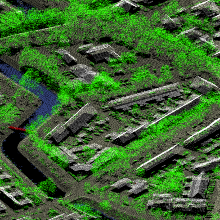

Semantic segmentation of point clouds is one of the main steps in the automated processing of Airborne Laser Scanning (ALS) data. Established methods typically require the expensive computation of handcrafted, point-wise features. In contrast, Convolutional Neural Networks (CNNs) have been established as powerful classifiers that also learn a set of optimal features by themselves. Applying them to ALS data is not trivial, however. Pure 3D CNNs require a lot of memory and computing time, so most approaches project point clouds into two-dimensional images, which leads to a loss of information.

The challenge is to exploit the sparsity often inherent in 3D data. We investigate the efficient semantic segmentation of ALS voxel clouds in end-to-end encoder-decoder architectures.
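To illustrate what sparsity means for a voxelized point cloud, the following minimal sketch stores only occupied voxels in a dictionary and reports how small a share of the bounding grid they fill. The function names `voxelize` and `occupancy`, the 1 m voxel size, and the sample points are assumptions made for illustration; they are not taken from the project.

```python
from collections import defaultdict


def voxelize(points, voxel_size):
    """Map (x, y, z, intensity) points to a sparse dict of occupied voxels.

    Returns {(i, j, k): mean_intensity}. Empty voxels are never stored.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, z, intensity in points:
        # Floor division assigns each point to the voxel that contains it.
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        sums[key] += intensity
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


def occupancy(voxels):
    """Fraction of the bounding voxel grid that is actually occupied."""
    dims = [
        max(k[axis] for k in voxels) - min(k[axis] for k in voxels) + 1
        for axis in range(3)
    ]
    return len(voxels) / (dims[0] * dims[1] * dims[2])


# Hypothetical sample: four points with echo intensity as the only feature.
points = [
    (0.2, 0.3, 0.1, 10.0),
    (0.4, 0.1, 0.2, 30.0),
    (5.6, 0.2, 0.3, 50.0),
    (5.1, 4.7, 9.9, 70.0),
]
voxels = voxelize(points, voxel_size=1.0)
print(len(voxels), occupancy(voxels))
```

A dense 3D CNN would allocate and process every cell of the bounding grid, whereas a sparse representation only touches the occupied entries.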

The overall accuracy achieved on the ISPRS Vaihingen 3D benchmark is state-of-the-art. Rare object categories can still be identified reasonably well when the network is trained with a weighted loss function, provided their intra-class variance is well represented in the training set. The implicit geometry of the point cloud has proven to be the primary feature. Object classes with similar geometries can be differentiated more easily by augmenting the input with native point features such as echo intensity.
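The text does not say which weighting scheme the weighted loss function uses. As one common possibility, the sketch below assumes inverse-frequency class weights and a weighted cross-entropy normalized by the sum of the weights. The function names and the toy labels are hypothetical.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels, num_classes):
    """Weight each class by total / (num_classes * count). Assumed scheme."""
    counts = Counter(labels)
    total = len(labels)
    return [
        total / (num_classes * counts[c]) if counts[c] else 0.0
        for c in range(num_classes)
    ]


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class), normalized by the weight sum."""
    numerator = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    denominator = sum(weights[y] for y in labels)
    return numerator / denominator


# Toy example: class 1 is rare (1 of 4 samples).
labels = [0, 0, 0, 1]
weights = inverse_frequency_weights(labels, num_classes=2)

# Uniform predictions, so every sample has the same unweighted loss.
probs = [[0.5, 0.5]] * len(labels)
loss = weighted_cross_entropy(probs, labels, weights)

# Share of the total weight carried by the single rare-class sample.
rare_share = weights[1] / sum(weights[y] for y in labels)
print(weights, loss, rare_share)
```

With these weights the single rare-class sample carries as much weight as the three frequent-class samples together, so the rare class is not drowned out during training.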

Larger amounts of ALS training data, such as the AHN3 dataset (www.pdok.nl), make training more stable and yield better test results. However, these networks still require a considerable amount of graphics memory, which limits resolution and sample extent.
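The trade-off between resolution, sample extent and memory can be made concrete with rough arithmetic. The sketch below estimates the size of a single dense feature volume for a cubic sample. This is a worst-case bound, since sparse networks store only occupied voxels, and all numbers (32 m extent, 32 channels, 4-byte floats) are hypothetical rather than the project's settings.

```python
import math


def dense_grid_bytes(extent_m, voxel_size_m, channels, bytes_per_value=4):
    """Memory of one dense feature volume over a cubic sample, in bytes."""
    cells_per_axis = math.ceil(extent_m / voxel_size_m)
    return cells_per_axis ** 3 * channels * bytes_per_value


coarse = dense_grid_bytes(extent_m=32.0, voxel_size_m=0.5, channels=32)
fine = dense_grid_bytes(extent_m=32.0, voxel_size_m=0.25, channels=32)

# Halving the voxel size multiplies the cell count by 2^3 = 8.
print(coarse / 2**20, "MiB", fine / 2**20, "MiB", fine / coarse)
```

Doubling the sample extent at a fixed voxel size has the same eightfold effect, which is why both quantities are bounded by the available graphics memory.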

References:

Schmohl, S. & Soergel, U. (2019). ALS Klassifizierung mit Submanifold Sparse Convolutional Networks. Dreiländertagung der DGPF, der OVG und der SGPF in Wien, Österreich. Publikationen der DGPF, Band 28, pp. 111-122. URL: https://www.dgpf.de/src/tagung/jt2019/proceedings/proceedings/papers/23_3LT2019_Schmohl_Soergel.pdf

Contact

Uwe Sörgel

Prof. Dr.-Ing.

Director of the Institute