Simultaneous localization and mapping (SLAM) is widely used in robotics, autonomous driving, and augmented reality. Among the various SLAM paradigms, visual SLAM has developed rapidly because cameras are inexpensive and provide rich visual information. Our research builds on dense visual SLAM: it aims to fully exploit per-pixel visual information and the dense correspondences induced by optical flow predicted by deep neural networks. Compared with classical sparse, landmark-based visual SLAM, this improves the accuracy and robustness of device localization and enables dense reconstruction of the environment.

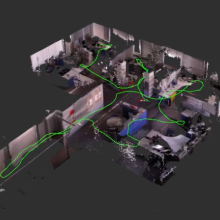

The focus of the project is on mobile robotics, where off-the-shelf wheel odometry information can be merged with camera data. Depth information captured by depth cameras, such as the Intel RealSense D435, is incorporated as depth priors for bundle adjustment, resulting in more accurate and complete depth maps. Leveraging the fusion of multiple registered color and depth images into a projective truncated signed distance function (TSDF) volume, we generate mesh representations of the environment, achieving accuracy within a few centimeters when compared to reference models obtained by laser scanners.
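The fusion step can be illustrated with a minimal sketch of projective TSDF integration, assuming a pinhole camera and a running weighted average per voxel. The function name `integrate_depth`, its parameters, and the 5 cm truncation distance are hypothetical choices made for this illustration; the sketch is not the project's implementation.

```python
import numpy as np

def integrate_depth(tsdf, weight, voxel_centers, depth, K, T_cam_world, trunc=0.05):
    """Fuse one depth image into a projective TSDF (hypothetical helper).

    tsdf, weight:  float arrays of length N, one entry per voxel, updated in place.
    voxel_centers: (N, 3) voxel centers in world coordinates (meters).
    depth:         (H, W) depth image in meters; 0 marks invalid pixels.
    K:             (3, 3) pinhole intrinsics (assumed camera model).
    T_cam_world:   (4, 4) world-to-camera transform of the registered frame.
    trunc:         truncation distance in meters (assumed value).
    """
    h, w = depth.shape
    # Transform voxel centers into the camera frame.
    pts_cam = voxel_centers @ T_cam_world[:3, :3].T + T_cam_world[:3, 3]
    z = pts_cam[:, 2]
    in_front = z > 1e-6
    z_safe = np.where(in_front, z, 1.0)
    # Project each voxel center to its nearest pixel.
    u = np.round(K[0, 0] * pts_cam[:, 0] / z_safe + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * pts_cam[:, 1] / z_safe + K[1, 2]).astype(int)
    visible = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    # Look up the measured depth along each voxel's viewing ray.
    d = np.zeros_like(z)
    d[visible] = depth[v[visible], u[visible]]
    # Projective signed distance: positive in front of the surface.
    sdf = d - z
    # Skip invalid pixels and voxels far behind the observed surface.
    update = visible & (d > 0) & (sdf >= -trunc)
    obs = np.clip(sdf[update] / trunc, -1.0, 1.0)
    # Running weighted average with unit weight per observation.
    w_old = weight[update]
    tsdf[update] = (w_old * tsdf[update] + obs) / (w_old + 1.0)
    weight[update] = w_old + 1.0
    return tsdf, weight
```

A mesh would then typically be extracted from the zero level set of the fused volume, for example with marching cubes.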

As more devices come equipped with IMUs for gravity direction estimation, and new benchmarks push the boundaries of SLAM methods, we explore the tightly coupled integration of multi-camera inputs and complementary inertial measurements. This is achieved via a unified factor graph formulation that jointly optimizes poses and dense depth maps using information from all sensors. Fisheye cameras play a pivotal role in our system. We operate directly on raw fisheye images, which lets us fully exploit their wide field of view (FoV) and discover more potential loop closures. Our approach secured the Hilti SLAM Challenge 2022 cash award in the visual-only track.
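As a rough illustration of what such a joint objective can look like, the following is a generic sketch and not necessarily the exact formulation used in the project. All symbols are assumptions introduced here: $T_i$ and $d_i$ are the pose and dense depth map of keyframe $i$, $v_i$ and $b_i$ are its velocity and IMU biases, $\mathcal{E}$ is the set of frame pairs connected by optical flow, $p_i$ is the pixel grid of frame $i$, $p^{*}_{ij}$ is the flow-predicted position of those pixels in frame $j$, $\Pi_c$ is the camera projection model (here a fisheye model), $T_{ij} = T_j T_i^{-1}$ is the relative pose, and $r^{\mathrm{imu}}$ is an inertial residual between consecutive keyframes.

```latex
\min_{\{T_i\},\,\{d_i\},\,\{v_i,\, b_i\}}
\;\sum_{(i,j)\in\mathcal{E}}
\left\| p^{*}_{ij} - \Pi_c\!\left( T_{ij}\,\Pi_c^{-1}(p_i, d_i) \right) \right\|^{2}_{\Sigma_{ij}}
\;+\;
\sum_{i}
\left\| r^{\mathrm{imu}}_{i,i+1}\!\left( T_i, T_{i+1}, v_i, v_{i+1}, b_i, b_{i+1} \right) \right\|^{2}_{\Sigma^{\mathrm{imu}}_{i}}
```

The first sum contains the dense visual factors, weighted per pixel by $\Sigma_{ij}$, and the second contains the inertial factors. Because both sums share the pose variables $T_i$, minimizing them together couples the sensors tightly rather than fusing separately estimated trajectories.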

To densely reconstruct environments using a single RGB camera, we adopt NeRF techniques for map representation. Using multi-threaded processing, we develop a system that runs tracking and loop closure-based global optimization in parallel. Additionally, we train NeRF models in real time using the poses and depth estimates from bundle adjustment, together with monocular geometric priors predicted by off-the-shelf neural networks.
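To make the role of depth estimates in NeRF training more concrete, here is a minimal sketch of the standard volume-rendering formula for the expected depth along one ray, followed by an L1 depth term against a prior. The function names, the sample layout, and the choice of an L1 loss are assumptions made for illustration and are not taken from the project.

```python
import numpy as np

def render_depth(sigma, t):
    """Expected depth along one ray via standard volume rendering.

    sigma: (S,) non-negative densities at the ray samples.
    t:     (S,) sorted sample distances along the ray.
    """
    # Distance between consecutive samples; the last interval is open-ended.
    delta = np.diff(t, append=t[-1] + 1e10)
    # Opacity of each interval.
    alpha = 1.0 - np.exp(-sigma * delta)
    # Transmittance: probability that the ray reaches each sample unoccluded.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = trans * alpha
    return float(np.sum(weights * t)), weights

def depth_loss(rendered_depth, prior_depth):
    """L1 penalty between rendered depth and a depth prior (assumed loss form)."""
    return abs(rendered_depth - prior_depth)

# Example: a ray that hits an opaque surface at distance 3.
t = np.array([1.0, 2.0, 3.0, 4.0])
sigma = np.array([0.0, 0.0, 1e6, 0.0])
depth, weights = render_depth(sigma, t)
loss = depth_loss(depth, prior_depth=3.1)
```

In a full system the densities would come from the learned field and the loss would be minimized by gradient descent over many rays; this sketch only shows how a depth prior enters the objective.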

The final task is to create a future-proof product that adapts to various low-cost sensor setups, including single cameras, IMUs, wheel odometry, depth cameras, and multiple cameras. To this end, we aim for unified frameworks covering all setups, ensuring robust tracking with deep learning-based dense visual SLAM techniques and enabling real-time dense 3D environment modeling with accuracy comparable to LiDAR sensors, while retaining richer visual information.

References

Zhang, W., Cheng, Q., Skuddis, D., Zeller, N., Cremers, D., & Haala, N. (2024). HI-SLAM2: Geometry-Aware Gaussian SLAM for Fast Monocular Scene Reconstruction. https://arxiv.org/abs/2411.17982

Zhang, W., Sun, T., Wang, S., Cheng, Q., & Haala, N. (2024). HI-SLAM: Monocular Real-Time Dense Mapping With Hybrid Implicit Fields. IEEE Robotics and Automation Letters, 9(2), 1548-1555. https://hi-slam.github.io/

Zhang, W., Wang, S., Dong, X., Guo, R., & Haala, N. (2023). BAMF-SLAM: Bundle Adjusted Multi-Fisheye Visual-Inertial SLAM Using Recurrent Field Transforms. 2023 IEEE International Conference on Robotics and Automation (ICRA), 6232-6238. https://bamf-slam.github.io/

Zhang, W., Wang, S., & Haala, N. (2022). Towards Robust Indoor Visual SLAM and Dense Reconstruction for Mobile Robots. ISPRS Ann. Photogramm. Remote Sens. Spatial Inf. Sci., V-1-2022, 211-219. https://doi.org/10.5194/isprs-annals-V-1-2022-211-2022

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director

Wei Zhang

M.Sc., Research Associate