Christian Mayr

Conditional Infrared Image Synthesis for Semantic Segmentation

Duration: 6 months

Completion: April 2024

Supervisors: Dr. Michael Teutsch, Christian Kübler (both Hensoldt)

Examiner: Prof. Dr.-Ing. Norbert Haala

Introduction

Semantic segmentation, a fundamental task in computer vision, assigns a class label to each pixel in an image based on its semantic meaning. Although primarily used in automotive scenarios, it also has applications in diverse fields such as remote sensing and medical imaging. Modern deep learning methods are effective, but they rely heavily on extensive training data, and fully supervised learning requires pixel-wise annotations that are costly to produce. As a result, the availability of large public datasets for semantic segmentation remains limited. Recent efforts aim to reduce annotation complexity through approaches such as procedural generation of synthetic data. However, bridging the domain gap between synthetic and real data remains a challenge.

Moreover, while semantic segmentation has been studied extensively in the visual spectrum (VIS), it remains underexplored in the infrared (IR) spectrum. To address this gap, this thesis applies conditional image synthesis using the diffusion-based model ControlNet [8]. ControlNet [8] is adapted to the IR spectrum via transfer learning on the Full-Time Multi-Modality Benchmark (FMB) [3] dataset and then used to generate a large number of synthetic IR images. These synthetic images are used to train SegFormer [7], a Transformer-based semantic segmentation model. The main contributions are: a method for synthesizing IR images based on ControlNet; a SegFormer model trained on the synthesized data; and extensive experiments showing that the proposed approach achieves near state-of-the-art (SOTA) performance in IR semantic segmentation, with improved generalization compared to models trained only on real data.

Methodology

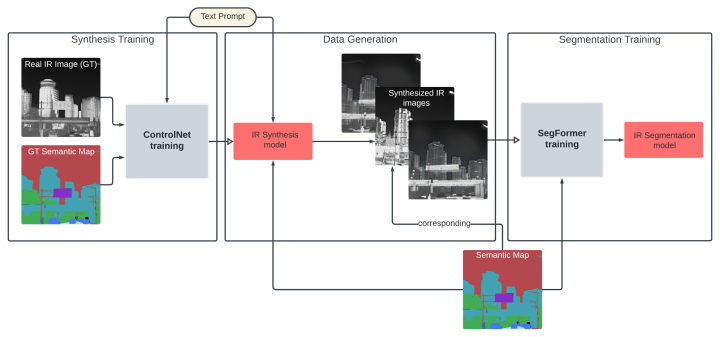

To improve the performance of semantic segmentation approaches operating on IR data, the pipeline shown in Figure 1 is proposed. The pipeline and its methodology are divided into three principal blocks.

The first block is the training of the IR image synthesis model. For this task, ControlNet [8], an image synthesis architecture, is adapted to synthesize IR images conditioned on semantic maps. ControlNet is trained on pairs of Ground Truth (GT) IR images and semantic maps extracted from the FMB [3] dataset, so that it synthesizes high-quality IR images whose semantic composition matches the provided semantic map. This enables the creation of multiple IR images from a single semantic map. Although these images differ in appearance, their semantic composition stays consistent because of the control exerted by the condition, the semantic map. ControlNet [8] exerts this control on its synthesis backbone, Stable Diffusion (SD) [4].
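The pairing of semantic maps and GT IR images can be pictured with a small sketch. It is only an illustration in plain Python: the three-class palette, the record keys (`condition`, `target`, `prompt`), the text prompt, and the file path are all hypothetical and are not taken from the thesis or from the FMB [3] dataset.

```python
# Hypothetical palette: class index -> RGB color.
# The real FMB classes and colors are NOT specified here; this is an assumption.
PALETTE = {
    0: (0, 0, 0),        # e.g. background
    1: (128, 64, 128),   # e.g. road
    2: (220, 20, 60),    # e.g. person
}


def colorize(label_map, palette=PALETTE):
    """Turn a 2D grid of class indices into a 2D grid of RGB tuples."""
    return [[palette[class_id] for class_id in row] for row in label_map]


def make_training_record(label_map, ir_image_path, prompt="an infrared image"):
    """Pair a color-coded semantic map (condition) with its GT IR image (target).

    The record layout and the default prompt are illustrative assumptions.
    """
    return {
        "condition": colorize(label_map),
        "target": ir_image_path,
        "prompt": prompt,
    }


# A tiny 2x3 semantic map and a placeholder path to the matching IR image.
label_map = [[0, 1, 1],
             [2, 2, 1]]
record = make_training_record(label_map, "ir/00001.png")
```

Each record ties one condition image to one target image, which is the kind of pairing the training described above relies on.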

The second block is the generation of a large synthetic dataset using the previously trained IR image synthesis model. Ten IR images are generated for each provided semantic map, so the resulting synthetic dataset is significantly larger than the FMB [3] dataset that provides the input semantic maps.
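As a rough sketch of how such a dataset could be laid out, the snippet below builds a list of generation jobs, ten per semantic map. Only the factor of ten comes from the text; the use of a distinct random seed per image, the function name `generation_plan`, and the map identifiers are assumptions made for illustration.

```python
SAMPLES_PER_MAP = 10  # ten IR images per semantic map, as stated in the text


def generation_plan(map_ids, samples_per_map=SAMPLES_PER_MAP, base_seed=0):
    """Return a list of (map_id, seed) jobs, one job per synthetic image.

    Giving every job its own seed is an assumption: it is one simple way
    to obtain different images from the same semantic map.
    """
    plan = []
    for map_index, map_id in enumerate(map_ids):
        for sample_index in range(samples_per_map):
            seed = base_seed + map_index * samples_per_map + sample_index
            plan.append((map_id, seed))
    return plan


# Placeholder identifiers standing in for semantic maps of the source dataset.
map_ids = ["00001", "00002", "00003"]
plan = generation_plan(map_ids)
```

With three input maps the plan contains thirty jobs, so the synthetic set holds ten times as many images as there are input maps.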

Finally, a semantic segmentation approach - SegFormer [7] - is trained and evaluated on the aforementioned synthesized dataset. SegFormer [7] has been demonstrated to be effective in IR image segmentation tasks in works including [3, 1].

Experiments & Results

To evaluate the benefit of the proposed approach for semantic segmentation, multiple segmentation models were trained and compared. For this purpose, two other methods capable of generating IR images were selected: ASAPNet [5], a Generative Adversarial Network (GAN)-based image synthesis approach, and RGB2TIR [2], a GAN-based image translation approach. Like the proposed approach, both comparative methods were used to generate a fully synthetic dataset from data provided by the FMB [3] dataset.

These datasets, alongside the FMB [3] dataset itself, were used to train four different SegFormer [7] models for IR image-based semantic segmentation.

The following experiments were conducted:

- A qualitative assessment of the IR image synthesis quality

- A quantitative as well as a qualitative evaluation of the trained segmentation models

- A generalization study: the segmentation models trained on FMB or on the FMB-like synthesized datasets were applied to an unseen dataset, the Freiburg Thermal [6] dataset

Qualitative assessment - IR Synthesis

Segmentation Performance

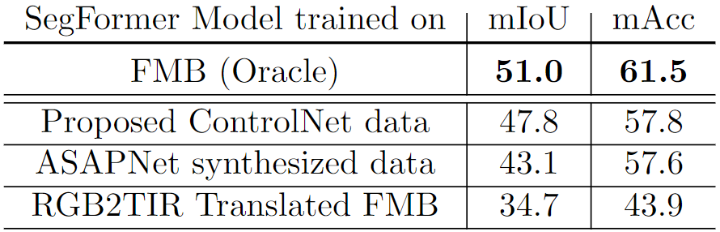

The table above shows the evaluation results of the previously described SegFormer [7] models. As expected, the model trained on the real FMB dataset, the so-called oracle model, performs best according to the mean Intersection over Union (mIoU) and mean Accuracy (mAcc) metrics shown in the table. Importantly, the SegFormer [7] trained on the data generated by the proposed pipeline performs best among the models trained on synthetic data.
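For readers unfamiliar with the two metrics, the following minimal sketch computes mIoU and mAcc from a confusion matrix in plain Python. It uses the common per-class definitions (IoU = TP / (TP + FP + FN), accuracy = TP / (TP + FN)). The exact averaging convention of the evaluation code used in the thesis is not stated here, so the handling of absent classes is an assumption, and the toy labels are invented.

```python
def confusion_matrix(gt, pred, num_classes):
    """Count pixels: rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        cm[g][p] += 1
    return cm


def miou_and_macc(cm):
    """Return (mIoU, mAcc) from a square confusion matrix.

    IoU is averaged over classes that appear in GT or prediction,
    accuracy over classes that appear in GT (an assumed convention).
    """
    n = len(cm)
    ious, accs = [], []
    for c in range(n):
        tp = cm[c][c]
        gt_total = sum(cm[c])                          # TP + FN
        pred_total = sum(cm[r][c] for r in range(n))   # TP + FP
        union = gt_total + pred_total - tp             # TP + FP + FN
        if union > 0:
            ious.append(tp / union)
        if gt_total > 0:
            accs.append(tp / gt_total)
    return sum(ious) / len(ious), sum(accs) / len(accs)


# Toy example: six flattened pixels, three classes (invented values).
gt = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(gt, pred, num_classes=3)
miou, macc = miou_and_macc(cm)
```

In this toy case the per-class IoUs are 1/3, 2/3 and 1/2, giving an mIoU of 0.5, while the per-class accuracies 1/2, 1 and 1/2 give an mAcc of 2/3.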

This observation is confirmed by the qualitative evaluation shown in the figure below. The segmentation maps of the GT, the oracle model, and the model trained on the data synthesized by the proposed pipeline look very similar. Together with the quantitative evaluation, this suggests that the SegFormer [7] trained on the proposed data achieves near-SOTA performance.

The results of the generalization study, shown in the table above, indicate that the proposed pipeline substantially benefits the generalization capabilities of semantic segmentation approaches, shown here using the SegFormer [7] architecture as an example. The model trained on the data generated by the method presented in this thesis achieves the highest scores among all models tested. To further illustrate this, the figure below shows results of the segmentation models applied to the test set of the Freiburg Thermal [6] dataset.

Conclusion & Outlook

In this work, a pipeline for the synthesis of arbitrary amounts of high-quality IR images corresponding to existing semantic annotations has been presented. The proposed approach can help to reduce the shortage of publicly available datasets in the IR domain. Therefore, this work can contribute to the research and development of SOTA image processing methods, especially semantic segmentation based on IR images. Furthermore, the effectiveness and benefits of the pipeline for IR image-based semantic segmentation approaches have been demonstrated by extensive experiments.

Future research could evaluate the influence of using 'foreign' semantic maps as input for the image synthesis. A semantic map is called foreign if it originates from a dataset on which the synthesis model has not been trained. For example, a semantic map from the Freiburg Thermal [6] dataset would be 'foreign' if it were used as input to synthesize images with a ControlNet [8] model trained on the FMB [3] dataset. Other possibilities include more detailed hyperparameter tuning for ControlNet [8] and for the SegFormer [7] models, as well as experiments towards closing the synthetic-to-real domain gap. Such experiments could use small amounts of real data to fine-tune a model that has been pre-trained on synthesized data.

Bibliography

[1] Zülfiye Kütük and Görkem Algan. “Semantic Segmentation for Thermal Images: A Comparative Survey”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops. June 2022, pp. 286–295. url: https://openaccess.thecvf.com/content/CVPR2022W/PBVS/html/Kutuk_Semantic_Segmentation_for_Thermal_Images_A_Comparative_Survey_CVPRW_2022_paper.html

[2] Dong-Guw Lee et al. “Edge-guided multi-domain rgb-to-tir image translation for training vision tasks with challenging labels”. In: 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE. 2023, pp. 8291–8298. url: https://ieeexplore.ieee.org/abstract/document/10161210/, https://github.com/RPM-Robotics-Lab/sRGB-TIR

[3] Jinyuan Liu et al. “Multi-interactive Feature Learning and a Full-time Multi-modality Benchmark for Image Fusion and Segmentation”. In: International Conference on Computer Vision. 2023. url: https://arxiv.org/abs/2308.02097

[4] Robin Rombach et al. “High-Resolution Image Synthesis with Latent Diffusion Models”. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2022. url: https://arxiv.org/abs/2112.10752, https://github.com/CompVis/latent-diffusion

[5] Tamar Rott Shaham et al. “Spatially-adaptive pixelwise networks for fast image translation”. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021, pp. 14882–14891. url: https://tamarott.github.io/ASAPNet_web/

[6] Johan Vertens, Jannik Zürn, and Wolfram Burgard. “HeatNet: Bridging the Day-Night Domain Gap in Semantic Segmentation with Thermal Images”. In: arXiv preprint arXiv:2003.04645 (2020). url: http://thermal.cs.uni-freiburg.de/

[7] Enze Xie et al. “SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers”. In: Neural Information Processing Systems (NeurIPS). 2021. url: https://github.com/NVlabs/SegFormer

[8] Lvmin Zhang, Anyi Rao, and Maneesh Agrawala. “Adding Conditional Control to Text-to-Image Diffusion Models”. Feb. 2023. url: https://github.com/lllyasviel/ControlNet

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute