Nadine Sprügel

Development of Feature Detection Based on Semantic Segmentation for Visual Odometry in Agricultural Environments

Duration: 6 months

Completion: November 2023

Supervisor: M.Sc. Dominik Moss (Fraunhofer-Institut für Produktionstechnik und Automatisierung (IPA))

Examiner: Prof. Dr.-Ing. Norbert Haala

Introduction

Sustainable and ecological agriculture requires precise and reliable positioning of the agricultural robot CURT (Crops Under Regular Treatment; Roehricht, 2022). The localization techniques currently in use, based on GNSS and wheel odometry, are prone to errors and therefore inaccurate. For this reason, the position of CURT should be determined using visual odometry. A recent review by Cremona et al. (2022) evaluated 11 visual odometry algorithms. None of them achieved the required accuracy because of the challenges of the agricultural environment, such as repetitive, similar-looking scenes and plants moving in the wind. Therefore, a new feature type for the agricultural environment was developed.

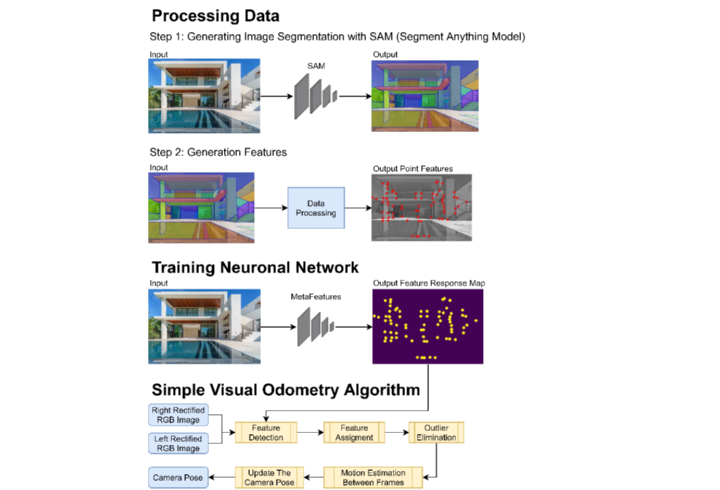

Development of the MetaFeatures

The agricultural images were semantically segmented using the Segment Anything Model (SAM; Kirillov et al., 2023), which does not run in real time. Two kinds of features were derived from this segmentation: features AC, which correspond to the contours of the semantic segmentation masks and their intersections, and features B, which represent the central points of the masks. Features AC and B were then detected in the image using one neural network per feature type; the detected features are referred to as MetaFeatures AC and MetaFeatures B. In addition, two simple visual odometry algorithms without local or global optimization were developed. One algorithm used MetaFeatures AC with the DAISY descriptor, while the other used ORB features and descriptors.
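To make the two feature types more concrete, the following is a minimal sketch of how candidate points could be read off a single binary segmentation mask. It is an illustration under assumptions, not the procedure used in the thesis: the "central point" is taken to be the pixel centroid, a contour pixel is taken to be any mask pixel with a 4-neighbour outside the mask, and the function names `mask_centroid` and `mask_contour` are hypothetical. Intersections between the contours of different masks are not handled here.

```python
from typing import List, Tuple

Mask = List[List[int]]  # rows of 0/1 values, indexed as mask[y][x]


def mask_centroid(mask: Mask) -> Tuple[float, float]:
    """Return the (x, y) centroid of all mask pixels (assumed 'feature B')."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        raise ValueError("mask contains no foreground pixels")
    n = len(coords)
    return sum(x for x, _ in coords) / n, sum(y for _, y in coords) / n


def mask_contour(mask: Mask) -> List[Tuple[int, int]]:
    """Return mask pixels that touch the background or the image border.

    Assumption: 4-connectivity defines the contour ('feature AC' candidates).
    """
    height, width = len(mask), len(mask[0])

    def inside(x: int, y: int) -> bool:
        return 0 <= x < width and 0 <= y < height and bool(mask[y][x])

    contour = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            neighbours = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
            if any(not inside(nx, ny) for nx, ny in neighbours):
                contour.append((x, y))
    return contour
```

For a filled 3 x 3 square, every pixel except the middle one is a contour pixel, and the centroid coincides with that middle pixel.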

Evaluation

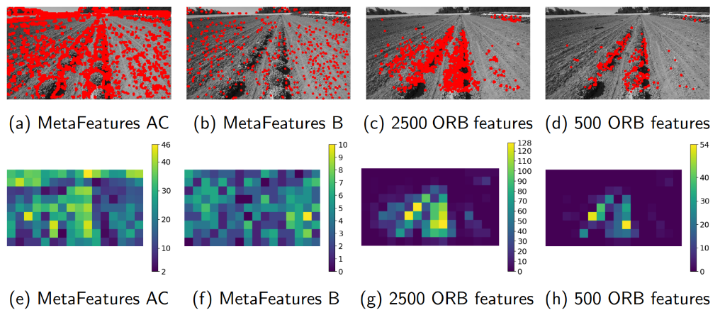

To evaluate the distribution of the MetaFeatures, we divided each image into 144 cells of 40 x 40 pixels and counted the number of features in each cell. For MetaFeatures AC, the number of cells without features was generally in the single digits, whereas for the 500 ORB features it was generally above 70.
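The cell-based count described above can be sketched in a few lines. The image size is not stated in the text; the usage below assumes 640 x 360 pixels only because that yields 16 x 9 = 144 cells of 40 x 40 pixels. The function names are hypothetical.

```python
from typing import Iterable, List, Tuple


def count_features_per_cell(
    points: Iterable[Tuple[float, float]],
    width: int,
    height: int,
    cell_size: int = 40,
) -> List[List[int]]:
    """Count feature points per grid cell; result is indexed as counts[row][col]."""
    cols = width // cell_size
    rows = height // cell_size
    counts = [[0] * cols for _ in range(rows)]
    for x, y in points:
        col = int(x // cell_size)
        row = int(y // cell_size)
        # Points outside the grid are ignored.
        if 0 <= col < cols and 0 <= row < rows:
            counts[row][col] += 1
    return counts


def count_empty_cells(counts: List[List[int]]) -> int:
    """Return the number of cells that contain no feature."""
    return sum(1 for row in counts for c in row if c == 0)


# Usage with an assumed 640 x 360 image (16 x 9 = 144 cells).
features = [(12.0, 8.0), (35.5, 20.0), (300.0, 180.0)]
grid = count_features_per_cell(features, width=640, height=360)
empty = count_empty_cells(grid)
```

A low number of empty cells indicates that the features are spread evenly over the image, which is the property compared between MetaFeatures AC and ORB features.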

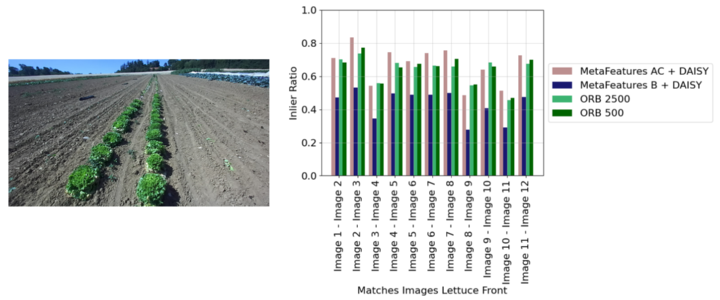

To assess the quality and stability of the MetaFeatures, we used the ratio of the matches used to determine the essential matrix (see Hartley & Zisserman, 2018) to all matches. This ratio was computed for consecutive images (quality) and for images separated by different time intervals (stability).
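The ratio can be illustrated with a small sketch. The text does not say how the matches supporting the essential matrix were selected; the code below assumes an already estimated essential matrix `E`, matched points in normalized image coordinates, and a threshold on the Sampson distance (a first-order approximation of the geometric epipolar error, see Hartley & Zisserman). The threshold value and the function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]          # normalized image coordinates (x, y)
Matrix3 = Sequence[Sequence[float]]  # 3x3 essential matrix, row-major


def sampson_distance(E: Matrix3, p1: Point, p2: Point) -> float:
    """Sampson distance of a match (p1 in image 1, p2 in image 2) w.r.t. E."""
    x1 = (p1[0], p1[1], 1.0)
    x2 = (p2[0], p2[1], 1.0)
    Ex1 = [sum(E[r][c] * x1[c] for c in range(3)) for r in range(3)]   # E x1
    Etx2 = [sum(E[r][c] * x2[r] for r in range(3)) for c in range(3)]  # E^T x2
    residual = sum(x2[r] * Ex1[r] for r in range(3))                   # x2^T E x1
    denom = Ex1[0] ** 2 + Ex1[1] ** 2 + Etx2[0] ** 2 + Etx2[1] ** 2
    return residual ** 2 / denom if denom > 0 else float("inf")


def inlier_ratio(
    E: Matrix3,
    pts1: Sequence[Point],
    pts2: Sequence[Point],
    threshold: float = 1e-4,  # assumed value, depends on the camera and scale
) -> float:
    """Fraction of matches whose Sampson distance is within the threshold."""
    if len(pts1) != len(pts2):
        raise ValueError("pts1 and pts2 must have the same length")
    if not pts1:
        return 0.0
    inliers = sum(
        1 for p1, p2 in zip(pts1, pts2) if sampson_distance(E, p1, p2) <= threshold
    )
    return inliers / len(pts1)
```

A ratio close to 1 means that almost all matches are consistent with the estimated relative pose; applying the same measure to image pairs that are further apart in time gives the stability figure.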

To determine the accuracy of the trajectories produced by our simple visual odometry algorithms, we calculated the absolute position error (APE; Sturm et al., 2012), the absolute trajectory error (ATE), the relative position error (RPE), and the relative trajectory error (RTE). The ground-truth trajectory was used as the reference where it was available; otherwise, the ORB-SLAM3 trajectory (Campos et al., 2021) was used.
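The exact definitions of the four error measures are not given here. As a rough illustration in the spirit of Sturm et al. (2012), the sketch below computes a translation-only absolute error and a translation-only relative error as root mean square values. It assumes that both trajectories are already time-associated and expressed in the same coordinate frame; alignment and rotational errors are deliberately left out, and the function names are hypothetical.

```python
import math
from typing import List, Sequence

Position = Sequence[float]  # (x, y, z) position of one pose


def _rmse(errors: List[float]) -> float:
    """Root mean square of a non-empty list of error values."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def absolute_error_rmse(est: Sequence[Position], ref: Sequence[Position]) -> float:
    """RMSE of the point-wise distances between estimated and reference positions."""
    if len(est) != len(ref) or not est:
        raise ValueError("trajectories must be non-empty and of equal length")
    return _rmse([math.dist(e, r) for e, r in zip(est, ref)])


def relative_error_rmse(
    est: Sequence[Position], ref: Sequence[Position], delta: int = 1
) -> float:
    """RMSE of the difference between estimated and reference motion over `delta` steps."""
    if len(est) != len(ref) or len(est) <= delta:
        raise ValueError("trajectories must be of equal length and longer than delta")
    errors = []
    for i in range(len(est) - delta):
        est_motion = [b - a for a, b in zip(est[i], est[i + delta])]
        ref_motion = [b - a for a, b in zip(ref[i], ref[i + delta])]
        errors.append(math.dist(est_motion, ref_motion))
    return _rmse(errors)
```

The absolute measure reflects accumulated drift over the whole trajectory, while the relative measure reflects the local accuracy between poses that are `delta` steps apart.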

Discussion and Conclusion

The evaluation indicates that, in contrast to the classical ORB features, MetaFeatures AC and B cover almost the entire image. Consequently, many MetaFeatures were also present in the soil area.

Moreover, the evaluation of quality and stability shows that MetaFeatures AC achieve a quality similar to that of the ORB features and outperform them in terms of stability. MetaFeatures B, however, exhibit lower quality than the ORB features. The lower quality is due to the robot's movement altering the appearance of the plants in the captured images, which changes the shapes of the masks. These changes affected MetaFeatures B more strongly because they correspond to the center pixels of the masks. Not all MetaFeatures AC were affected, because the changes did not alter the entire contour of a mask.

The evaluation of our simple visual odometry algorithms showed that using MetaFeatures AC instead of ORB features led to a more accurate pose estimate between two images separated by a larger time interval. Furthermore, the absolute trajectory error (ATE) of our simple visual odometry trajectories suggests that local optimization is needed to keep up with the latest visual odometry algorithms. Additionally, MetaFeatures AC should replace the classical features in current state-of-the-art visual odometry algorithms.

Bibliography (selection)

Campos, C., Elvira, R., Rodríguez, J. J. G., Montiel, J. M. M., & Tardós, J. D. (2021). ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Transactions on Robotics, 37(6), 1874–1890. https://doi.org/10.1109/TRO.2021.3075644

Cremona, J., Comelli, R., & Pire, T. (2022). Experimental evaluation of Visual‐Inertial Odometry systems for arable farming. Journal of Field Robotics, 39(7), 1121–1135. https://doi.org/10.1002/rob.22099

Hartley, R., & Zisserman, A. (2018). Multiple view geometry in computer vision (2nd ed., 17th printing). Cambridge University Press.

Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., Xiao, T., Whitehead, S., Berg, A. C., Lo, W.-Y., Dollár, P., & Girshick, R. (2023, April 5). Segment Anything. http://arxiv.org/pdf/2304.02643v1

Roehricht, K. (2022, November 15). Landwirtschaft der Zukunft: Roboter CURT beackert die Felder (Teil 2). Fraunhofer-Institut für Produktionstechnik und Automatisierung IPA. https://www.biointelligenz.de/biointelligente-produktion/%5C-landwirtschaft-der-zukunft-roboter-curt-beackert-die-felder-teil-2/

Sturm, J., Engelhard, N., Endres, F., Burgard, W., & Cremers, D. (2012). A benchmark for the evaluation of RGB-D SLAM systems. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 7–12 Oct. 2012, Vilamoura, Algarve, Portugal (pp. 573–580). IEEE. https://doi.org/10.1109/IROS.2012.6385773

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute