Henrik Müller (M.Sc. LRT)

Investigation and Implementation of Fisheye Camera Models into Droid-SLAM

Duration: 6 months

Completion: July 2023

Supervisor: M.Sc. Wei Zhang

Examiner: Prof. Dr.-Ing. Norbert Haala

Introduction

Fisheye cameras are widely used in visual SLAM systems because of their wide field of view (FoV). Dedicated fisheye camera models such as the Kannala-Brandt [1] or the Double Sphere camera model [2] accurately describe how objects are projected onto the camera sensor, but they make processing more complex than for images that follow the rectilinear camera model. Many frameworks, including Droid-SLAM [3], therefore require the images to be remapped in advance to the perspective (pinhole) camera model. This step is often referred to as undistortion, although technically it is a remapping between camera models. Unfortunately, this remapping leads to a significant loss of FoV and information density within the image and thereby potentially restricts the accuracy of the photogrammetric process.
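
To make the difference between the camera models concrete, the following is a minimal sketch of the forward projection of the pinhole, Kannala-Brandt [1] and Double Sphere [2] models in plain Python. The function names and the parameter order are chosen for illustration only and are not taken from Droid-SLAM or from the thesis implementation. The sketch assumes points in front of the camera and omits the validity checks a real implementation would need.

```python
import math


def project_pinhole(point, fx, fy, cx, cy):
    """Pinhole (perspective) projection of a 3D point in the camera frame."""
    x, y, z = point
    return fx * x / z + cx, fy * y / z + cy


def project_kannala_brandt(point, fx, fy, cx, cy, k1, k2, k3, k4):
    """Kannala-Brandt projection: the image radius is a polynomial in the
    incidence angle theta instead of tan(theta) as in the pinhole model."""
    x, y, z = point
    r = math.hypot(x, y)
    if r < 1e-12:
        # Point on the optical axis (assumed in front of the camera).
        return cx, cy
    theta = math.atan2(r, z)
    t2 = theta * theta
    # d = theta + k1*theta^3 + k2*theta^5 + k3*theta^7 + k4*theta^9
    d = theta * (1.0 + t2 * (k1 + t2 * (k2 + t2 * (k3 + t2 * k4))))
    return fx * d * x / r + cx, fy * d * y / r + cy


def project_double_sphere(point, fx, fy, cx, cy, xi, alpha):
    """Double Sphere projection with sphere offset xi and blending alpha.
    No check is made here that the point lies in the valid projection region."""
    x, y, z = point
    d1 = math.sqrt(x * x + y * y + z * z)
    z_shifted = xi * d1 + z
    d2 = math.sqrt(x * x + y * y + z_shifted * z_shifted)
    denom = alpha * d2 + (1.0 - alpha) * z_shifted
    return fx * x / denom + cx, fy * y / denom + cy
```

With xi = 0 and alpha = 0 the Double Sphere sketch reduces to the pinhole projection, which is a convenient sanity check when comparing the models.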

The aim of this thesis was to extend Droid-SLAM in two ways: first, to process pinhole-remapped images with an increased FoV and, second, to implement two dedicated fisheye camera models so that the original fisheye images can be processed without prior remapping. Finally, the framework's ability to optimise the intrinsic camera parameters in the joint optimisation process was investigated to test whether the accuracy of the camera trajectory can be improved further.

Methods

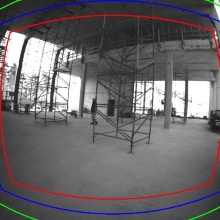

To increase the FoV of the pinhole image produced by remapping the fisheye images, the focal length was adjusted so that more information is included in the resulting image. In Fig. 1, the red frame marks the boundary of the remapped pinhole image in the standard implementation, reprojected onto the original image. The blue and green frames mark the boundaries of remapped images with decreased focal lengths. An investigation of the camera models and images at various FoVs showed that the distribution of information density in the original fisheye image is superior to that of the images obtained after remapping to pinhole. To leverage this advantage, two dedicated fisheye camera models were implemented in Droid-SLAM to process the native images directly. The FoV of these images was then adjusted by cropping.
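
The link between focal length and FoV in the pinhole remapping can be sketched as follows. This is a minimal illustration that assumes a principal point at the image centre and a fixed output image width. The function names are hypothetical and the values used in the thesis are not reproduced here.

```python
import math


def focal_length_for_fov(image_width_px, fov_deg):
    """Focal length (in pixels) that yields the given horizontal FoV
    for a pinhole image of the given width."""
    return image_width_px / (2.0 * math.tan(math.radians(fov_deg) / 2.0))


def fov_for_focal_length(image_width_px, focal_length_px):
    """Horizontal FoV (in degrees) of a pinhole image of the given width."""
    return math.degrees(2.0 * math.atan(image_width_px / (2.0 * focal_length_px)))
```

For a fixed image width, a shorter focal length gives a wider FoV, but the required focal length shrinks quickly as the FoV approaches 180°, which illustrates why the pinhole model cannot represent the full fisheye image.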

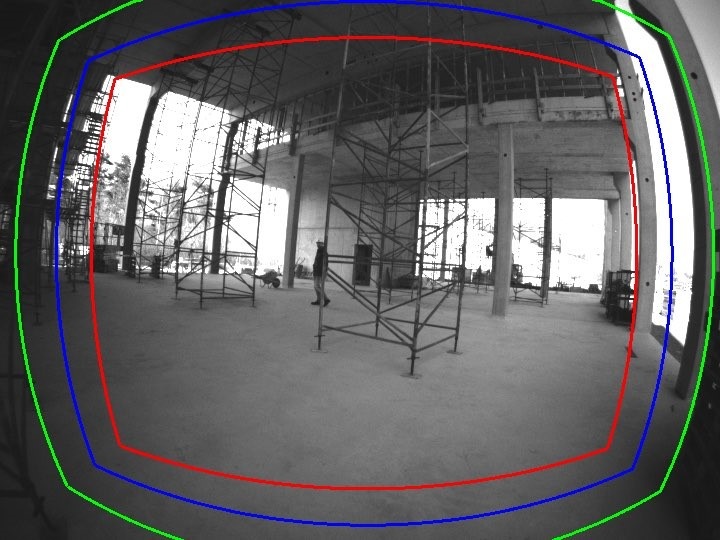

To perform the online update (calibration) of the intrinsic parameters, they were integrated into the bundle adjustment layer of Droid-SLAM. Fig. 2 shows the visualised optical flow, which serves as the basis for the bundle adjustment in Droid-SLAM. This visualisation was implemented in the framework to better understand the results and functionality of the implemented methods.

Results

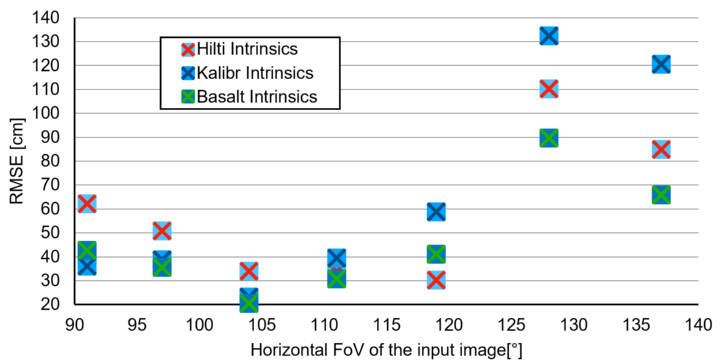

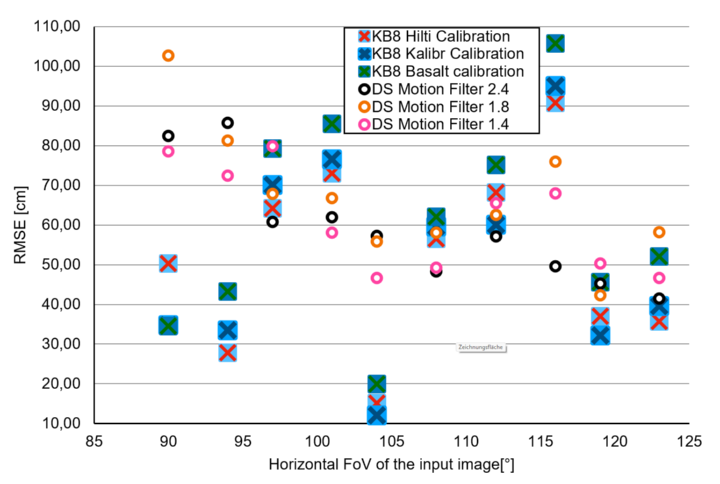

To test the implemented methods, the Hilti-SLAM Challenge Dataset 2022 [4] was used. It provides ground truth trajectories for all sequences, against which the accuracy of the trajectories estimated by Droid-SLAM can be evaluated. The results for the sequence "Construction Ground Level" are shown in Fig. 3 for the remapped pinhole images and in Fig. 4 for the implemented fisheye camera models. For the Kannala-Brandt model, three different calibrations were additionally tested. The plots show the RMSE of the estimated trajectories compared to the ground truth.
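
As an illustration of the error measure, the RMSE between an estimated and a ground truth trajectory can be computed as in the sketch below. It assumes that both trajectories are already time-associated and expressed in the same coordinate frame (for example after a similarity alignment). The exact evaluation procedure used in the thesis is not specified here, and the function name is hypothetical.

```python
import math


def trajectory_rmse(estimated, ground_truth):
    """Root mean square of the Euclidean position errors between two
    trajectories given as equally long sequences of (x, y, z) positions."""
    if len(estimated) != len(ground_truth) or len(estimated) == 0:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_error_sum = 0.0
    for est, gt in zip(estimated, ground_truth):
        squared_error_sum += sum((a - b) ** 2 for a, b in zip(est, gt))
    return math.sqrt(squared_error_sum / len(estimated))
```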

The entry on the left is the standard implementation with the closest FoV. For this sequence, a FoV of 104° gives the best results, reducing the RMSE by 66%. This is consistent with the results of the experiments with the original fisheye images. With both methods, the accuracy was improved compared to the standard implementation. Up to this point, all tests were conducted with the standard motion filter threshold of 2.4. Fig. 4 additionally shows the results for the Double Sphere model with different motion filter thresholds, where lower thresholds result in more keyframes. Here, no correlation with the accuracy could be determined.
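
Why a lower motion filter threshold produces more keyframes can be illustrated with a simplified sketch. It assumes that a frame becomes a keyframe when the mean optical flow magnitude relative to the last keyframe exceeds the threshold. The callable `flow_between` is a hypothetical stand-in for the flow estimation of the framework, and the sketch is not the Droid-SLAM implementation.

```python
import math


def mean_flow_magnitude(flow):
    """Mean length of a list of (dx, dy) flow vectors in pixels."""
    return sum(math.hypot(dx, dy) for dx, dy in flow) / len(flow)


def select_keyframes(num_frames, flow_between, threshold=2.4):
    """Return the indices of frames kept as keyframes.

    flow_between(i, j) is a hypothetical callable that returns the flow
    vectors from frame i to frame j.
    """
    keyframes = [0]  # the first frame is always kept
    for index in range(1, num_frames):
        flow = flow_between(keyframes[-1], index)
        if mean_flow_magnitude(flow) > threshold:
            keyframes.append(index)
    return keyframes


def constant_motion(i, j):
    """Synthetic example: the image content shifts by 1 px per frame."""
    return [(float(j - i), 0.0)] * 4


few = select_keyframes(10, constant_motion, threshold=2.4)
many = select_keyframes(10, constant_motion, threshold=1.5)
```

In this synthetic example the threshold of 2.4 keeps every third frame, while a threshold of 1.5 keeps every second frame.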

The implemented online calibration approach could not improve the accuracy and hence did not improve the quality of the intrinsic parameter sets. This might be due to the strong influence of numerical effects, which might also cause the alternating performance between different FoV steps and between the different parameter sets, so that different parameters perform best depending on the FoV and the sequence.

Conclusion

Droid-SLAM is a state-of-the-art framework with outstanding performance, which makes further improvement very challenging. However, the two implemented approaches to varying the FoV produce results that significantly exceed the accuracy of the original framework on the tested sequences, confirming the assumptions made about the potential of wide-angle images and the additional information they contain.

These results can also be applied to other projects and frameworks. They might help to decide whether adjusting the FoV in the remapping process is sufficient, so that the simpler implementation of the pinhole camera model can be kept, or whether a dedicated fisheye model should be implemented to process images with the maximum available information density.

Bibliography

[1] J. Kannala and S. S. Brandt, "A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 28, no. 8, pp. 1335-1340, Aug. 2006, doi: 10.1109/TPAMI.2006.153.

[2] V. Usenko, N. Demmel and D. Cremers, "The Double Sphere Camera Model," 2018 International Conference on 3D Vision (3DV), Verona, Italy, 2018, pp. 552-560, doi: 10.1109/3DV.2018.00069.

[3] Z. Teed and J. Deng, "DROID-SLAM: Deep Visual SLAM for Monocular, Stereo, and RGB-D Cameras," in Advances in Neural Information Processing Systems 34 - 35th Conference on Neural Information Processing Systems, NeurIPS, 2021.

[4] L. Zhang, M. Helmberger, L. F. T. Fu, D. Wisth, M. Camurri, D. Scaramuzza and M. Fallon, "Hilti-Oxford Dataset: A Millimeter-Accurate Benchmark for Simultaneous Localization and Mapping," in IEEE Robotics and Automation Letters, vol. 8, no. 4, pp. 408-415, 2023, doi: 10.1109/LRA.2022.3226077.

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute