Hakam Shams

Semantic Mesh Segmentation using PointNet++

Duration of the Thesis: 6 months

Completion: December 2019

Supervisors: MSc Dominik Laupheimer, Prof. Dr.-Ing. Norbert Haala

Examiner: Prof. Dr.-Ing. Norbert Haala

Abstract

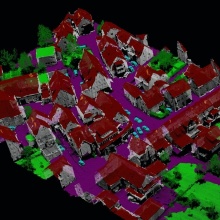

Research on 3D data is common in photogrammetry and remote sensing. In contrast to point clouds, meshes hold high-resolution textural and geometric features with a smaller amount of data. However, only a few research works deal with semantic mesh segmentation on large-scale datasets. The main aim of this thesis is to analyze and evaluate semantic segmentation of meshes in an outdoor urban scenario using PointNet++, a point-based deep learning network that can be applied to meshes. We implemented a pipeline for semantic mesh segmentation that covers pre-processing, training and evaluation. Additionally, we propose a novel way to combine a LiDAR point cloud with the mesh. The dataset used is a 2.5D textured mesh (Cramer et al., 2018) generated from an integrated system of airborne oblique imagery and laser scanning (LiDAR).

Methodology

In contrast to images, 3D data is inherently unstructured. Thus, image-based networks cannot be applied to 3D data directly; they require a transfer of information and an adaptation of the CNN. To operate directly on meshes, point-based hierarchical feature learning (PointNet++) is used (cf. Figure 1). First, the input mesh is represented as a point cloud in which each point is the center of gravity (COG) of a face. By calculating a feature vector for each face, we thus reduce the problem of semantic mesh segmentation to semantic point cloud segmentation. The training data is split into spatially overlapping tiles of fixed dimensions. Batches are then generated from these overlapping tiles by kNN sampling.
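The reduction from faces to points and the kNN batch sampling can be illustrated with a minimal sketch. It assumes triangular faces given as vertex-index triples and uses a brute-force neighbor search; the function names `face_centroids` and `knn_batch` and the toy mesh are hypothetical and not taken from the thesis implementation.

```python
import math

def face_centroids(vertices, faces):
    """Return the center of gravity (COG) of each triangular face."""
    centroids = []
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        centroids.append(tuple((a[d] + b[d] + c[d]) / 3.0 for d in range(3)))
    return centroids

def knn_batch(points, seed_index, k):
    """Indices of the k points nearest to points[seed_index] (brute force).

    The seed itself is included, since its distance to itself is zero.
    """
    seed = points[seed_index]
    order = sorted(range(len(points)), key=lambda n: math.dist(points[n], seed))
    return order[:k]

# Toy mesh: four vertices, two triangles (hypothetical data).
vertices = [(0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0), (3.0, 3.0, 3.0)]
faces = [(0, 1, 2), (1, 2, 3)]

cogs = face_centroids(vertices, faces)       # one point per face
batch = knn_batch(cogs, seed_index=0, k=2)   # face indices forming one batch
```

For meshes of realistic size, a spatial index such as a k-d tree or ball tree would replace the brute-force sort; the sketch only shows the data flow from faces to COG points to a batch of face indices.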

To incorporate LiDAR features, we implemented a tool that parses a Wavefront OBJ file together with its corresponding LiDAR point cloud and attaches LiDAR features to each face. The association is based on a ball tree and barycentric coordinates with adaptive thresholds (cf. Figure 2). Thus, the full feature vector consists of geometric, radiometric and LiDAR features. For testing, we split the test tiles into overlapping batches and store the predicted label and class probabilities per face for each batch. We then merge the predictions by averaging the probabilities over all batches. The final predicted label per face is the class with the maximum averaged probability (cf. Figure 4).
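The merging step for overlapping test batches can be sketched as follows. This is an illustrative sketch, assuming each batch is a mapping from face index to a list of per-class probabilities; the function name `merge_predictions` and the toy values are hypothetical.

```python
from collections import defaultdict

def merge_predictions(batches, num_classes):
    """Average per-face class probabilities over all batches, then take the argmax."""
    sums = defaultdict(lambda: [0.0] * num_classes)
    counts = defaultdict(int)
    for batch in batches:
        for face_id, probs in batch.items():
            for c in range(num_classes):
                sums[face_id][c] += probs[c]
            counts[face_id] += 1

    labels = {}
    for face_id, total in sums.items():
        mean = [s / counts[face_id] for s in total]
        # Final label: class with the maximum averaged probability.
        labels[face_id] = max(range(num_classes), key=mean.__getitem__)
    return labels

# Face 0 appears in both batches, face 1 only in the first (hypothetical data).
batches = [
    {0: [0.6, 0.4], 1: [0.9, 0.1]},
    {0: [0.1, 0.9]},
]
labels = merge_predictions(batches, num_classes=2)
```

In this toy example, face 0 would be assigned class 0 from the first batch alone, but the averaged probabilities favor class 1, which shows how overlapping batches can revise a single-batch decision.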

Results and Conclusions

Because LiDAR points are associated with mesh faces, we can map information from the mesh to its associated LiDAR points. Thus, we achieve a direct labeling of the point cloud (cf. Figure 6). Similarly, we obtain predicted labels for the point cloud from the predicted labels of the mesh.
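Once each LiDAR point is linked to a face, the label transfer itself reduces to a lookup. The sketch below assumes a precomputed list that holds, for every LiDAR point, the index of its associated face, or `None` if no face was found; the name `transfer_labels` and the `unlabeled` marker are hypothetical.

```python
def transfer_labels(face_labels, point_to_face, unlabeled=-1):
    """Copy each face label to the LiDAR points associated with that face."""
    return [
        face_labels[face] if face is not None else unlabeled
        for face in point_to_face
    ]

face_labels = [2, 0, 1]                  # one class label per face
point_to_face = [0, 0, 2, None, 1]       # face index per LiDAR point
point_labels = transfer_labels(face_labels, point_to_face)
```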

In this thesis, we showed the capability of PointNet++ for semantic mesh segmentation. The approach achieved an overall accuracy (OA) of close to 86%, along with 89% of correctly predicted surface area. As the results show, in some areas the classifier has problems clearly separating green space from impervious surface. We observed that adding better color information improved the result. In this context, the best-performing color information was the median HSV per face. Moreover, we combined the LiDAR point cloud with the mesh and investigated different feature vector compositions. The best feature vector consisted of all geometric, radiometric and LiDAR features (number of returns, reflectance). Regarding the LiDAR features, on the one hand, reflectance was mainly helpful for detecting more vehicles and slightly improved the predictions for the majority of classes. On the other hand, the number of returns mainly improved the precision for mid and high vegetation. Limitations remain due to the geometric representation of the 2.5D mesh. Therefore, replacing the 2.5D mesh with a real 3D mesh is expected to improve the results. However, even with the 2.5D mesh, the label transfer between mesh and LiDAR point cloud showed encouraging results. This could be extended to other 3D representations and would allow the implementation of a fused model using both mesh and point cloud.
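The two reported figures weight faces differently, which a small sketch can make concrete. It assumes that OA counts every face equally and that the surface-area figure weights each face by its area; these definitions are an assumption for illustration, and the function names and values are hypothetical.

```python
def overall_accuracy(pred, truth):
    """Fraction of faces whose predicted label matches the ground truth."""
    correct = sum(p == t for p, t in zip(pred, truth))
    return correct / len(truth)

def area_weighted_accuracy(pred, truth, areas):
    """Fraction of the total surface area covered by correctly labeled faces."""
    correct_area = sum(a for p, t, a in zip(pred, truth, areas) if p == t)
    return correct_area / sum(areas)

pred = [0, 1, 1, 2]
truth = [0, 1, 2, 2]
areas = [4.0, 1.0, 1.0, 2.0]   # face areas, e.g. in square meters

oa = overall_accuracy(pred, truth)                  # 3 of 4 faces correct
awa = area_weighted_accuracy(pred, truth, areas)    # 7 of 8 area units correct
```

Under these assumed definitions, the area-weighted value exceeds OA whenever the misclassified faces are smaller than average, which would be consistent with the reported 89% versus 86%.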

References

- Cramer, M., Haala, N., Laupheimer, D., Mandlburger, G. & Havel, P., 2018: Ultra-High Precision UAV-Based LiDAR and Dense Image Matching. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, XLII-1, 115-120.

- Qi, C., Yi, L., Su, H. & Guibas, L. J., 2017: PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. Proceedings of the 31st International Conference on Neural Information Processing Systems, 5105-5114.

- Tutzauer, P., Laupheimer, D. & Haala, N., 2019: Semantic Urban Mesh Enhancement Utilizing a Hybrid Model. ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, IV-2/W7, 175-182.

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute