Yujing Chen

Road Inventory Mapping with Street-Level Imagery from iPhone

A Combination of Structure from Motion and Deep Learning

Duration of the Thesis: 6 months

Completion: July 2019

Supervisors: Dr.-Ing. Michael Cramer, Achim Hoth (corporate partner)

Examiner: Prof. Dr.-Ing. Norbert Haala

Abstract

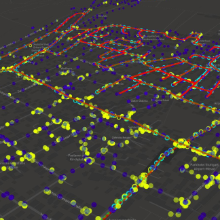

We present a system that combines state-of-the-art object detection with structure from motion to reconstruct and analyse, in 3D, street-view image sequences taken with mobile phones. An object detection model was trained and deployed with TensorFlow to detect traffic signs in the images. A 3D reconstruction of the scene is produced by matching key points between images. Within the reconstructed scene, detections in the images can be transferred into 3D space and vice versa. A top view of the street surface is generated by transforming and stitching the street-view images. The system establishes the connection between the images and 3D space, which makes it possible to convert pixel coordinates to world coordinates, locate objects in 3D space, and measure the real-world dimensions of objects seen in the images. It is used to support automatic road condition surveillance for our corporate partner.

Key words: Computer Vision, Machine Learning, Structure from Motion, Convolutional Neural Networks.
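As an illustration of the pixel-to-world transfer mentioned in the abstract, the following is a minimal sketch, not the thesis implementation. It assumes a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`), a world-to-camera rotation `R` and a camera centre `C` taken from a metrically scaled reconstruction, and a flat road at world height `Z = 0`. The function name `pixel_to_ground` and all parameter names are hypothetical.

```python
import math

def pixel_to_ground(u, v, fx, fy, cx, cy, R, C):
    """Intersect the viewing ray of pixel (u, v) with the plane Z = 0.

    R is the 3x3 world-to-camera rotation (list of rows) and C the camera
    centre in world coordinates. Both are assumed to come from a
    metrically scaled reconstruction (an assumption of this sketch).
    """
    # Ray direction in the camera frame: K^-1 [u, v, 1]^T
    ray_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into the world frame: d = R^T ray_cam
    d = [sum(R[k][i] * ray_cam[k] for k in range(3)) for i in range(3)]
    if abs(d[2]) < 1e-12:
        raise ValueError("ray is parallel to the ground plane")
    # Solve C_z + s * d_z = 0 for the ray parameter s
    s = -C[2] / d[2]
    if s <= 0:
        raise ValueError("ground plane is behind the camera")
    return tuple(C[i] + s * d[i] for i in range(3))

# Hypothetical camera 10 units above the road, looking straight down.
R_down = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
centre = (0.0, 0.0, 10.0)
a = pixel_to_ground(640, 360, 1000, 1000, 640, 360, R_down, centre)
b = pixel_to_ground(1640, 360, 1000, 1000, 640, 360, R_down, centre)
# Real-world distance between the two ground points
width = math.dist(a, b)
```

Measuring an object then reduces to the distance between two such ground points. The flat-road assumption only holds for objects lying on the road surface; points above the road would need the reconstructed 3D geometry instead.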

In instance segmentation, each object receives a bounding box with a corresponding class and confidence, as in object detection. In addition, a mask showing which pixels belong to the object is computed for each bounding box. The binary masks are later used for road surface stitching.
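The output described above can be pictured as one record per detected object. The sketch below is illustrative only: the `Instance` type, the `"road"` label, and the idea of merging masks and blanking out all other pixels before stitching are assumptions, since the text does not specify how the masks enter the stitching step.

```python
from dataclasses import dataclass
from typing import List, Tuple

Mask = List[List[int]]  # binary mask with the same height and width as the image

@dataclass
class Instance:
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    label: str                      # predicted class
    score: float                    # confidence in [0, 1]
    mask: Mask                      # 1 where the pixel belongs to the object

def union_mask(instances, label, height, width, min_score=0.5):
    """Merge the masks of all confident instances of `label` into one mask."""
    merged = [[0] * width for _ in range(height)]
    for inst in instances:
        if inst.label != label or inst.score < min_score:
            continue
        for y in range(height):
            for x in range(width):
                if inst.mask[y][x]:
                    merged[y][x] = 1
    return merged

def apply_mask(image, mask, fill=0):
    """Keep pixels where the mask is 1 and replace all others with `fill`."""
    return [[px if m else fill for px, m in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]

# Tiny 2x2 example with hypothetical detections.
detections = [
    Instance((0, 0, 1, 1), "road", 0.9, [[1, 0], [0, 0]]),
    Instance((1, 1, 2, 2), "road", 0.8, [[0, 0], [0, 1]]),
    Instance((1, 0, 2, 1), "sign", 0.95, [[0, 1], [0, 0]]),
    Instance((0, 1, 1, 2), "road", 0.2, [[0, 0], [1, 0]]),  # below threshold
]
road = union_mask(detections, "road", height=2, width=2)
road_only = apply_mask([[5, 6], [7, 8]], road)
```

Plain nested lists keep the sketch dependency-free; with real images an array library would be the natural choice.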

Conclusion

We developed and discussed algorithms for a low-cost mobile mapping system that uses mobile phones alone. Deep learning with convolutional neural networks was used to detect objects in the images, followed by structure from motion to rebuild the 3D scene, locate and map the objects, and generate the stitched road surface. The data from our corporate partner was closely examined, and improvements and further potential were proposed and tested. The project also indicates the great potential of portable, low-cost mobile mapping systems based on mobile phones for road surface and road inventory mapping.

Contact

Michael Cramer

Dr.-Ing., Group Leader, Photogrammetric Systems