Fangwen Shu

Implicit Joint Semantic Segmentation of Images and Point Cloud

Duration of the Thesis: 6 months

Completion: April 2019

Supervisor: M.Sc. Dominik Laupheimer

Examiner: Prof. Dr.-Ing. Norbert Haala

Introduction

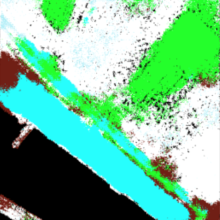

To avoid feeding noisy or non-uniformly sampled point clouds into a 3D CNN, this work proposes a novel fusion of the labeled LiDAR point cloud and oriented aerial imagery in 2D space. This allows us to leverage image-based semantic segmentation and to build a multi-view, multi-modal and multi-scale segmentation classifier. A fast back-projection of the 2D semantic result to the 3D point cloud then yields a joint semantic segmentation of imagery and point cloud. The proposed method is validated on our own dataset: oriented high-resolution oblique and nadir aerial imagery of the village of Hessigheim, Germany, captured by an unmanned aerial vehicle (UAV), together with the LiDAR point cloud obtained by airborne laser scanning (ALS). The high-resolution aerial images offer views of a diverse urban scene. Combined with the geometric characteristics derived from the point cloud, they are a promising basis for building a large dataset for training a well-engineered deep CNN.
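The back-projection step can be illustrated with a minimal sketch. It is not the implementation used in the thesis. It assumes an ideal pinhole camera without lens distortion, ignores occlusion, and resolves disagreement between views by a simple majority vote. The names `project_point` and `back_project_labels` and the layout of the `view` dictionary are hypothetical and chosen only for illustration.

```python
from collections import Counter


def project_point(point, R, t, f, cx, cy):
    """Project a 3D world point into pixel coordinates (pinhole model).

    Assumption: R (3x3 nested lists) and t (length 3) map world to camera
    coordinates as X_cam = R * X_world + t; f is the focal length in pixels
    and (cx, cy) the principal point. Returns (u, v, depth), or None if the
    point lies behind the camera.
    """
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        return None
    u = f * xc[0] / xc[2] + cx
    v = f * xc[1] / xc[2] + cy
    return u, v, xc[2]


def back_project_labels(points, views):
    """Give each 3D point the majority label over all views that see it.

    Each view is a dict with keys R, t, f, cx, cy and 'labels', a 2D list of
    class ids indexed as labels[row][col]. Occlusion is not handled here.
    Points seen by no view get the label None.
    """
    result = []
    for p in points:
        votes = Counter()
        for view in views:
            proj = project_point(p, view["R"], view["t"],
                                 view["f"], view["cx"], view["cy"])
            if proj is None:
                continue
            col, row = int(round(proj[0])), int(round(proj[1]))
            labels = view["labels"]
            if 0 <= row < len(labels) and 0 <= col < len(labels[0]):
                votes[labels[row][col]] += 1
        result.append(votes.most_common(1)[0][0] if votes else None)
    return result


# Toy example: one camera at the origin looking along +Z, 3x3 label image.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
view = {"R": identity, "t": [0, 0, 0], "f": 1.0, "cx": 1.0, "cy": 1.0,
        "labels": [[0, 0, 0], [0, 1, 2], [0, 0, 0]]}
points = [(0.0, 0.0, 5.0), (5.0, 0.0, 5.0), (0.0, 0.0, -1.0)]
print(back_project_labels(points, [view]))  # prints [1, 2, None]
```

In a real setting, a visibility check (for example a depth buffer per image) would be needed so that points hidden behind roofs or vegetation do not receive labels from surfaces in front of them.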

Dataset

This work uses a large-scale ALS point cloud covering the village of Hessigheim, Germany, together with nadir and oblique imagery captured by UAV (Cramer et al., 2018). Note that the LiDAR point cloud has already been labeled manually (master's thesis by Michael Kölle), whereas the aerial imagery is initially unlabeled.

Methodology to Establish Connection Between 3D Point Cloud and 2D Imagery

Training

Results

References

Audebert, N., Le Saux, B., and Lefèvre, S. (2018). Beyond RGB: Very high resolution urban remote sensing with multimodal deep networks. ISPRS Journal of Photogrammetry and Remote Sensing, 140:20–32.

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12):2481–2495.

Chen, L.-C., Papandreou, G., Schroff, F., and Adam, H. (2017). Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587.

Cramer, M., Haala, N., Laupheimer, D., Mandlburger, G., and Havel, P. (2018). Ultra-high precision UAV-based LiDAR and dense image matching. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences.

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute