Mariia Kokhova

Learning Super-resolved Depth from Multiple Overlapping Gated Images with Neural Networks

Duration of the Thesis: 6 months

Completion: June 2018

Supervisor: M. Sc. Tobias Gruber (Daimler AG)

Supervisor & Examiner: Prof. Dr.-Ing. Norbert Haala

Introduction

Three-dimensional perception of the environment is a crucial task for autonomous driving. This thesis investigates depth reconstruction from multiple two-dimensional gated images. All experiments were carried out with the BrightWay Vision (BWV) gated system. Three methods for range estimation from gated images are described in the literature: time slicing, range-intensity correlation, and gain modulation. In this work, neural networks were used for 3D scene reconstruction from gated images.

Gated Imaging

A gated camera is an active imaging system that uses a time-synchronized light source for illumination; in the case of the BWV system, this is a near-infrared (NIR) light source. This allows the gated system to capture only the part of a scene that lies within a selected distance range (referred to as a slice in the following), as shown in Figure 1. The selected range is determined by the synchronization between the illumination laser and the gated camera.
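The relation between gate timing and the captured distance range can be sketched with a simple time-of-flight model. This is only an illustration under stated assumptions, not the BWV system's actual timing model: the laser pulse and the camera gate are both taken to be ideal rectangles, and the function name `slice_range` and all timing values are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def slice_range(delay_s, gate_s, pulse_s):
    """Return (r_min, r_max) in metres for one gated slice.

    Assumption: the laser emits a rectangular pulse during [0, pulse_s]
    and the camera gate is open during [delay_s, delay_s + gate_s].
    Light reflected at range r returns during [2r/c, 2r/c + pulse_s],
    so a range is visible if that interval overlaps the open gate.
    """
    r_min = max(0.0, C * (delay_s - pulse_s) / 2.0)
    r_max = C * (delay_s + gate_s) / 2.0
    return r_min, r_max


# Hypothetical timing values, chosen only for illustration.
r_min, r_max = slice_range(delay_s=200e-9, gate_s=300e-9, pulse_s=100e-9)
print(f"slice covers {r_min:.1f} m to {r_max:.1f} m")
```

With these example values the slice spans roughly 15 m to 75 m. Changing the delay shifts the slice in range, which is how several overlapping slices of the same scene can be recorded.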

Gated Depth Estimation

The basic principle of neural networks (NNs) is that an algorithm is trained rather than programmed in the traditional sense. Given a large amount of training data, an NN can estimate an input-output mapping that generalizes to unseen inputs. The main idea of our algorithm is to collect a large amount of data and to learn the function between the intensity values of the gated images and the range with an NN. The training data were recorded in Germany (Hamburg) and Denmark (Copenhagen) by a test vehicle equipped with two light detection and ranging (LIDAR) systems (Velodyne HDL64 S3 and Velodyne VLP32C) and a gated system from BWV. The NN for depth estimation was trained with the intensities of three slices as input and the distance from the reference measurement system as output, see Figure 2. Intrinsic and extrinsic calibration was performed in order to project the LIDAR points into the rectified gated image.
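The projection of LIDAR points into the rectified gated image can be sketched with a standard pinhole camera model. This is a minimal sketch, not the calibration pipeline used in the thesis: the function name `project_points`, the matrices `K`, `R`, `t` and the sample points are all hypothetical, and the camera frame is assumed to have its z axis pointing forward.

```python
import numpy as np


def project_points(points_lidar, R, t, K):
    """Project N x 3 LIDAR points into pixel coordinates.

    R (3x3) and t (3,) are the assumed extrinsics mapping the LIDAR frame
    to the camera frame; K (3x3) is the assumed intrinsic matrix of the
    rectified camera. Returns the pixel coordinates and the Euclidean
    range of every point that lies in front of the camera.
    """
    pts_cam = points_lidar @ R.T + t          # LIDAR frame -> camera frame
    pts_cam = pts_cam[pts_cam[:, 2] > 0]      # keep points in front of the camera
    uvw = pts_cam @ K.T                       # apply the intrinsics
    pixels = uvw[:, :2] / uvw[:, 2:3]         # perspective division
    ranges = np.linalg.norm(pts_cam, axis=1)  # distance label per point
    return pixels, ranges


# Hypothetical calibration and points, for illustration only.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.zeros(3)
points = np.array([[0.0, 0.0, 10.0],
                   [1.0, 0.0, 10.0],
                   [0.0, 0.0, -5.0]])  # the last point is behind the camera

pixels, ranges = project_points(points, R, t, K)
```

Each projected pixel then pairs the gated intensities at that image location with a reference distance, which is the kind of input-output sample the training described above relies on.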

Before training, the dataset was filtered and normalized. Four different filtering algorithms were proposed.

As no standard network existed for this task, an appropriate network design had to be found. Therefore, a grid search over different network sizes, batch sizes, activation functions and learning rates was performed. Finally, a simple NN with a single hidden layer of 40 nodes was selected as appropriate for our task.
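A network of this shape (three slice intensities in, 40 hidden nodes, one distance out) can be sketched in a few lines of NumPy. This is only an illustrative sketch: the tanh activation, the learning rate, the weight initialization and the synthetic data are assumptions made here, not the configuration or data selected in the thesis.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data: 3 normalized slice intensities per sample and a
# made-up target. Real training would use gated intensities and LIDAR ranges.
n = 512
X = rng.uniform(0.0, 1.0, size=(n, 3))
y = (X @ np.array([0.5, 0.3, 0.2]))[:, None]

# Network 3 -> 40 -> 1 with one hidden layer (activation is an assumption).
W1 = rng.normal(0.0, 0.5, size=(3, 40))
b1 = np.zeros(40)
W2 = rng.normal(0.0, 0.1, size=(40, 1))
b2 = np.zeros(1)


def forward(inputs):
    """Return hidden activations and the predicted (normalized) distance."""
    hidden = np.tanh(inputs @ W1 + b1)
    return hidden, hidden @ W2 + b2


def mse(pred, target):
    return float(np.mean((pred - target) ** 2))


lr = 0.05  # hypothetical learning rate
loss_before = mse(forward(X)[1], y)

for _ in range(500):
    hidden, pred = forward(X)
    grad_out = 2.0 * (pred - y) / n                     # d(MSE)/d(pred)
    grad_hidden = (grad_out @ W2.T) * (1.0 - hidden ** 2)  # back through tanh
    W2 -= lr * (hidden.T @ grad_out)
    b2 -= lr * grad_out.sum(axis=0)
    W1 -= lr * (X.T @ grad_hidden)
    b1 -= lr * grad_hidden.sum(axis=0)

loss_after = mse(forward(X)[1], y)
print(f"MSE before: {loss_before:.4f}, after: {loss_after:.4f}")
```

A grid search as described above would repeat such a training run for each combination of hidden-layer size, batch size, activation function and learning rate, and keep the configuration with the lowest validation error.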

Evaluation of the Results

The performance of our method was evaluated on a separately collected test dataset of a static scene containing five targets with different reflectances, see Figure 3.

A relative accuracy of 5 % was achieved by our method over the range from 14 m to 85 m, see Figure 5. Furthermore, the method was shown to be independent of the target reflectance, the reference measurement system, and the location of the object in the field of view of the camera.
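One common way to express relative accuracy is the absolute depth error divided by the reference distance. The sketch below assumes that definition, which the text does not spell out; the function name `relative_errors` is hypothetical and the distances are made-up illustration values, not results from the thesis.

```python
def relative_errors(predicted_m, reference_m):
    """Return |predicted - reference| / reference for each pair of distances."""
    return [abs(p - r) / r for p, r in zip(predicted_m, reference_m)]


# Invented example distances in metres, for illustration only.
reference = [14.0, 40.0, 85.0]
predicted = [14.5, 38.5, 88.0]

errors = relative_errors(predicted, reference)
mean_relative_error = sum(errors) / len(errors)
within_5_percent = all(e <= 0.05 for e in errors)
```

Evaluating this per target and per reference sensor is one way to check whether the error depends on reflectance or on the measurement system.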

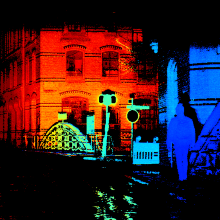

To illustrate the performance of our method, the depth map of a previously unseen scene was reconstructed from the gated images with the pre-trained NN (Figure 5). For reference, the depth map computed from a stereo camera with semi-global matching (SGM) is shown in Figure 6.

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Director of the Institute