Stefan Schmohl

Study on Noise Robustness of 3D Shape Recognition with Convolutional Neural Networks

Duration of the Thesis: 6 months

Completion: October 2017

Supervisor & Examiner: Prof. Dr.-Ing. Uwe Sörgel

Introduction

Recent work has shown that 3D convolutional neural networks are suitable for recognizing three-dimensional shapes with human-like performance. This was made possible by advances in the field of neural networks and by the availability of sufficiently large 3D datasets such as ModelNet. Different network architectures are commonly benchmarked on clean CAD models or good-quality scanned point clouds, but no systematic analysis of their robustness to noise-like distortions has been done yet. This thesis studied how 3D convolutional neural networks react to noisy input when applied to 3D shape classification, and what effects common generalization methods have on noise invariance.

Methodology

To this end, two algorithms were introduced that inject noise into binary occupancy voxel grids. This noise, which either concentrates on the object's surface or spreads over the whole grid in the form of clumps, is intended to simulate perturbations that can be expected in real-world measurements. The noise was applied to voxelized ModelNet CAD shapes, both for testing and for network training.
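The summary does not specify the two noise algorithms in detail. The following NumPy sketch is only one possible interpretation under stated assumptions: surface noise flips each voxel in a one-voxel band around the object surface (6-neighborhood) with probability p, and clump noise toggles all voxels inside randomly placed spheres. The function names, the band definition and the parameters `p`, `n_clumps` and `radius` are hypothetical.

```python
import numpy as np


def neighbor_any(mask: np.ndarray, pad_value: bool = False) -> np.ndarray:
    """True where at least one of the six face neighbors of a voxel is True."""
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    out = np.zeros(mask.shape, dtype=bool)
    for axis in range(3):
        for shift in (-1, 1):
            # Window into the padded array, offset by `shift` along `axis`.
            window = [slice(1, 1 + n) for n in mask.shape]
            window[axis] = slice(1 + shift, 1 + shift + mask.shape[axis])
            out |= padded[tuple(window)]
    return out


def surface_band(grid: np.ndarray) -> np.ndarray:
    """Assumed definition of the surface: object voxels touching free space
    plus free voxels touching the object."""
    occ = grid.astype(bool)
    inner = occ & neighbor_any(~occ, pad_value=True)  # grid border counts as free space
    outer = ~occ & neighbor_any(occ)
    return inner | outer


def surface_noise(grid: np.ndarray, p: float, rng: np.random.Generator) -> np.ndarray:
    """Flip each voxel of the surface band independently with probability p."""
    flips = surface_band(grid) & (rng.random(grid.shape) < p)
    return grid.astype(bool) ^ flips


def clump_noise(grid: np.ndarray, n_clumps: int, radius: float,
                rng: np.random.Generator) -> np.ndarray:
    """Toggle every voxel inside n_clumps randomly centered spheres."""
    coords = np.indices(grid.shape)  # shape (3, X, Y, Z): voxel coordinates
    clumps = np.zeros(grid.shape, dtype=bool)
    for _ in range(n_clumps):
        center = rng.integers(0, grid.shape)  # one random index per axis
        dist2 = sum((coords[a] - center[a]) ** 2 for a in range(3))
        clumps |= dist2 <= radius ** 2  # union, so overlapping clumps do not cancel
    return grid.astype(bool) ^ clumps


rng = np.random.default_rng(0)
grid = np.zeros((32, 32, 32), dtype=bool)
grid[8:24, 8:24, 8:24] = True  # toy "object": a solid cube
noisy_surface = surface_noise(grid, p=0.3, rng=rng)
noisy_clumps = clump_noise(grid, n_clumps=5, radius=2, rng=rng)
```

Surface noise only touches voxels next to the surface, so the overall shape stays recognizable, whereas a clump can add a free-floating blob in empty space or carve a hole into the object, depending on where it lands.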

Multiple configurations of a baseline network architecture were tested in order to improve its noise robustness. Different kinds of data augmentation as well as regularization techniques such as dropout and weight decay were used during training.
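The exact augmentation parameters are not given in the summary. A minimal NumPy sketch of the two augmentations named in the results (flips and translations of the object inside the voxel grid) could look as follows; the choice of flip axes, the shift range `max_shift` and all function names are assumptions.

```python
import numpy as np


def random_flip(grid: np.ndarray, rng: np.random.Generator, axes=(0, 1)) -> np.ndarray:
    """Mirror the grid along each of the given axes with probability 0.5.
    Assumes axes 0 and 1 are horizontal, so objects stay upright."""
    for axis in axes:
        if rng.random() < 0.5:
            grid = np.flip(grid, axis=axis)
    return grid


def translate(grid: np.ndarray, shift) -> np.ndarray:
    """Shift the content by an integer number of voxels per axis.
    Vacated voxels become free space; content pushed past the border is dropped.
    Assumes |shift| < grid size on every axis."""
    out = np.zeros_like(grid)
    src, dst = [], []
    for s, n in zip(shift, grid.shape):
        if s >= 0:
            src.append(slice(0, n - s))
            dst.append(slice(s, n))
        else:
            src.append(slice(-s, n))
            dst.append(slice(0, n + s))
    out[tuple(dst)] = grid[tuple(src)]
    return out


def random_translate(grid: np.ndarray, rng: np.random.Generator, max_shift: int = 2) -> np.ndarray:
    """Translate by a random offset drawn uniformly from [-max_shift, max_shift] per axis."""
    shift = rng.integers(-max_shift, max_shift + 1, size=grid.ndim)
    return translate(grid, shift)


rng = np.random.default_rng(1)
grid = np.zeros((32, 32, 32), dtype=bool)
grid[10:20, 12:22, 4:28] = True
augmented = random_translate(random_flip(grid, rng), rng)
```

Translating with zero fill instead of `np.roll` avoids wrapping parts of the object around to the opposite side of the grid.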

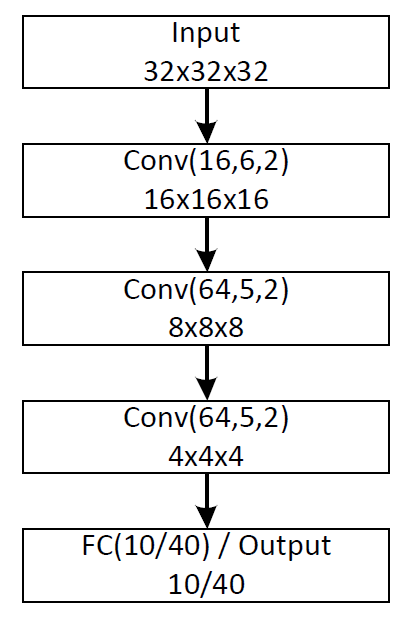

Figure 2: Baseline network architecture used in this thesis. Conv(k,f,s) denotes k filters of size f at stride s. In addition to the baseline architecture, two slightly altered networks were analyzed: the first uses max-pooling layers instead of strided convolutions, the second adds a dropout layer before the output layer.
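The layer sizes of Figure 2 are not given in the text, so the numbers below are hypothetical: a 32³ occupancy grid and a first layer Conv(32,5,2) in the caption's notation. The arithmetic illustrates why the max-pooling variant is more expensive: a stride-1 convolution followed by 2×2×2 max-pooling reaches the same output resolution as the strided convolution, but the convolution is evaluated at about s³ = 8 times as many positions.

```python
def conv_out(n: int, f: int, s: int) -> int:
    """Edge length after an unpadded ('valid') 3D convolution or pooling window."""
    return (n - f) // s + 1


def conv_macs(n_in: int, c_in: int, k: int, f: int, s: int):
    """Multiply-accumulates of Conv(k, f, s) on a c_in-channel n_in^3 grid, plus output edge length."""
    n_out = conv_out(n_in, f, s)
    return n_out ** 3 * k * c_in * f ** 3, n_out


# Hypothetical first layer on a 32^3 occupancy grid with one input channel.
macs_strided, n_strided = conv_macs(32, 1, 32, 5, 2)  # Conv(32,5,2)
macs_dense, n_dense = conv_macs(32, 1, 32, 5, 1)      # Conv(32,5,1) ...
n_pooled = conv_out(n_dense, 2, 2)                     # ... followed by 2x2x2 max-pooling

print(n_strided, n_pooled)        # 14 14
print(macs_dense / macs_strided)  # 8.0
```

This per-layer factor is not the same as the whole-network training time, which also depends on the remaining layers and on other overheads.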

Results

Augmenting the training data with flips and translations of the objects inside the voxel grid improves invariance to such transformations but worsens noise robustness. Generic techniques such as weight decay, however, show general improvements and lead to cleaner convolutional filters.
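In its common L2 form, weight decay adds (λ/2)‖w‖² to the training loss, so each gradient step also pulls the weights toward zero. The sketch below shows a single plain SGD step with this penalty; the learning rate and λ are illustrative assumptions, not the settings of the thesis. One plausible reading of the "cleaner" filters is that weights not supported by the data gradient decay geometrically, as the loop demonstrates.

```python
import numpy as np


def sgd_step_with_weight_decay(w, grad, lr=0.01, weight_decay=5e-4):
    """One SGD step on loss + (weight_decay / 2) * ||w||^2.

    The penalty's gradient is weight_decay * w, so every step shrinks the
    weights by the factor (1 - lr * weight_decay) in addition to the data gradient.
    """
    return w - lr * (grad + weight_decay * w)


# Weights that receive no data gradient decay geometrically toward zero.
w = np.array([1.0, -0.5, 0.25])
for _ in range(10):
    w = sgd_step_with_weight_decay(w, grad=np.zeros_like(w), lr=0.1, weight_decay=0.5)
print(w)  # each entry scaled by 0.95 ** 10
```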

The ConvNets are more sensitive to voxels changing from occupied to free than vice versa. Noise on the object surfaces, however, preserves the general object shape, so its effect on the object voxels is more tolerable (see Figure 3).
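To isolate this asymmetry, one could perturb the same grid in only one direction at a time and compare a trained network's accuracy on both variants. The following is a hypothetical probe of that kind, not the noise model of the thesis; the function name and the uniform per-voxel flip probability are assumptions.

```python
import numpy as np


def one_sided_noise(grid: np.ndarray, p: float, direction: str,
                    rng: np.random.Generator) -> np.ndarray:
    """Flip voxels in one direction only, each with probability p.

    direction="erase": occupied -> free (removes parts of the object)
    direction="add":   free -> occupied (adds spurious voxels)
    """
    occ = grid.astype(bool)
    if direction == "erase":
        candidates = occ
    elif direction == "add":
        candidates = ~occ
    else:
        raise ValueError(f"unknown direction: {direction!r}")
    return occ ^ (candidates & (rng.random(occ.shape) < p))


rng = np.random.default_rng(0)
grid = np.zeros((32, 32, 32), dtype=bool)
grid[8:24, 8:24, 8:24] = True
erased = one_sided_noise(grid, 0.1, "erase", rng)
added = one_sided_noise(grid, 0.1, "add", rng)
```

Because free voxels usually far outnumber occupied ones, equal p does not mean an equal number of changed voxels; a fairer comparison might fix the number of flips per grid instead.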

Applying dropout or max-pooling on its own decreases accuracy. Training with noisy data strongly increases classification accuracy for noise of the same or greater strength, but leads to underfitting at lower noise levels (Figure 4). This can be prevented by using an ensemble of networks trained on different noise levels, or by training a single network on several noise levels in the same way. If data augmentation is applied, max-pooling and dropout can also reduce this underfitting dip. However, because more convolutions have to be computed, networks with max-pooling take 2 to 5 times longer to train than networks with dropout, which show no significant training slowdown.
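The summary does not state how the ensemble members are combined. Averaging their softmax outputs is one common choice and is assumed in the following sketch; the noise levels in `levels` are placeholders, not the values used in the thesis. `sample_noise_level` illustrates the single-network alternative, in which each training sample is noised at a randomly drawn level.

```python
import numpy as np


def ensemble_predict(prob_list):
    """Combine networks by averaging their per-class softmax outputs.

    prob_list: list of arrays of shape (n_samples, n_classes), one per network.
    Returns the predicted class per sample and the averaged probabilities.
    """
    mean_probs = np.mean(np.stack(prob_list), axis=0)
    return mean_probs.argmax(axis=1), mean_probs


def sample_noise_level(rng: np.random.Generator, levels=(0.0, 0.1, 0.2, 0.3)) -> float:
    """Draw the noise level for one training sample, so that a single network
    sees a mix of clean and noisy inputs during training."""
    return float(rng.choice(levels))


# Two hypothetical networks, e.g. one trained on clean and one on noisy data.
p_clean = np.array([[0.9, 0.1], [0.4, 0.6]])
p_noisy = np.array([[0.6, 0.4], [0.2, 0.8]])
labels, probs = ensemble_predict([p_clean, p_noisy])
print(labels)  # [0 1]
```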

Conclusion

The results show that convolutional neural networks are generally not easily fooled by noise-like artifacts outside the shapes, but are prone to jitter near object surfaces. This thesis demonstrates the importance of appropriate data augmentation for robustness to a specific perturbation. Weight decay should generally be applied, whereas dropout has ambiguous effects. However, dropout proved to be an efficient tool when replacing expensive pooling-based downsampling with strided convolutions.

Contact

Uwe Sörgel

Prof. Dr.-Ing., Head of Institute, Academic Advisor