Minwei Tang

Out-of-core algorithms for large point clouds in photogrammetric applications

Duration of the thesis: 6 months

Completition: May 2014

Tutor: Dipl.-Ing. Konrad Wenzel

Examiner: Prof. Dr.-Ing. Dieter Fritsch

Introduction

Nowadays large point clouds are frequently used in photogrammetric applications, and the hardware limits of an end user workstation are seriously challenged by their ever increasing size. The storage required for a single point cloud can easily exceed the main memory size, thus it is impossible to process such point cloud at one time.

In this work the goal is to investigate the feasibility of applying the so called out-of-core algorithm to photogrammetric large point clouds. An open source out-of-core implementation by PCL (Point Cloud Library) has been tested with large point clouds data, and based on the test results several methods for improvements are discussed in order to accommodate photogrammetric features and tasks.

Out-of-core method

The out-of-core algorithm is designed to process large data that cannot fit into main memory. It utilizes external storage devices, usually hard disks to hold the full data set and fetches a small part of the data set into main memory as it is processed. Due to the slow data processing of the external storage devices, an out-of-core algorithm must convert the linear structured data set into an access-efficient tree structure to match the much quicker access of main memory. Additionally, utilizing the out-of-core algorithm for large point cloud must fit for the tasks of photogrammetric applications. Point based search operations such as nearest neighbors search are frequently used in photogrammetry. Furthermore, it is expected that the data set would be often updated during typical photogrammetric processes like points insertion, deletion and data filtering. In order to meet all the demands, it is essential to come up with an appropriate data structure that can provide high processing efficiency and flexibility.

The PCL has adopted the octree structure for the data management of point cloud on disk. In comparison to other data-driven structures such as kd-tree, the space-driven octree ensures that the capability of data update is not compromised, whilst the efficiency of search algorithms has large potential to be improved so it is not distinctly slower than that of data-driven structures.

Test and evaluation

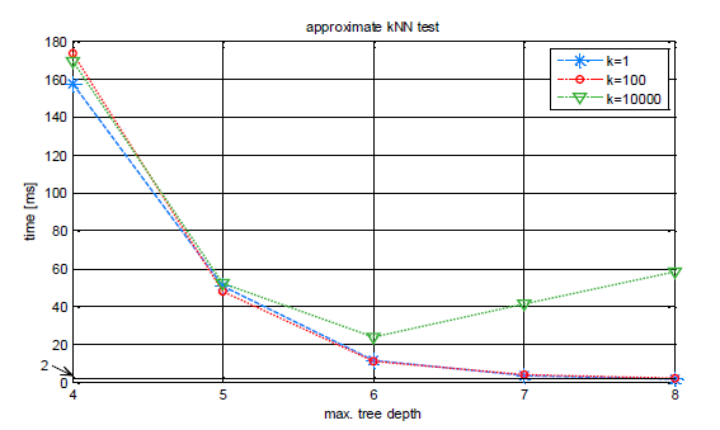

The center of this thesis is to test and evaluate the out-of-core algorithms for photogrammetric applications. One of the most frequently used functions in photogrammetric application is the k Nearest Neighbor (kNN) search, which enables many other operations such as point cloud resampling. We have tested the approximate kNN search with PCL over a point cloud of 110 million points. The shortest elapsed time for a single search point is 2ms when the tree has 8 levels of depth with k=1.

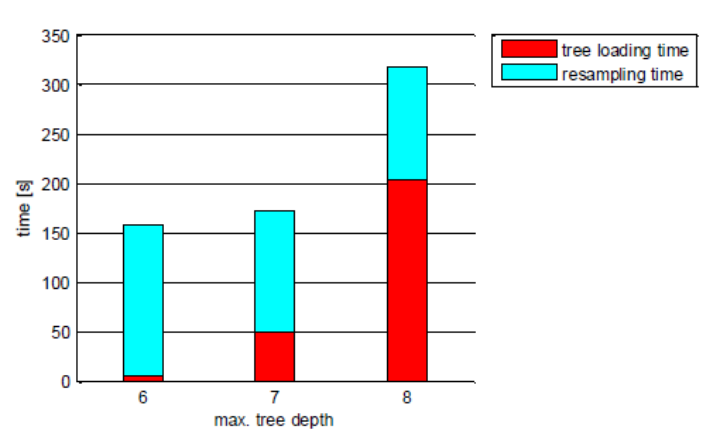

In the meantime, the resampling test (with 10% points resampled) is run with a point cloud of about 12 million points. Although the point cloud size is reduced, the resampling can process merely 1900 points per second at most. It is unacceptable when applying it for point clouds of huge size, since it could take half a day to finish 10% resampling over a large point cloud with 10 million points. Should the PCL module be applied in photogrammetric use of large point clouds, it must be optimized and redesigned to obtain much quicker search performance.

There are a number of aspects that can be considered to improve the PCL module. First of all its fixed depth should be made more flexible. Other than that, if PCL can exploit the regular space-partitioning method of octree more, the tree query performance can be greatly boosted. In addition, other data structures have certain superiority over octree, and if they are properly mixed with octree, some of the negative impacts caused by octree can be mitigated up until fully avoided.

Conclusion

The test and evaluation of PCL suggests that in order to achieve an efficient out-of-core implementation for photogrammetric applications of large point clouds, the traditional octree with an inflexible structure must be avoided and search algorithms must fully exploit the regularity of data distribution in octree. The key to a high efficiency is that any methods of improvements should focus on how to reduce the number of data input/output on hard drives and execute operations in external storage as fast as possible.

Ansprechpartner

Volker Walter

Dr.-Ing.Gruppenleiter Geoinformatik