Meijie Xiang

Automatic Vehicle and Occlusion Removal in Aerial and Satellite Imagery Using Deep Learning Algorithms

Duration: 6 months

Completion: January 2022

Supervisors: Dr. Reza Bahmanyar (DLR), Seyedmajid Azimi (DLR), Prof. Dr.-Ing. Uwe Sörgel

Examiner: Prof. Dr.-Ing. Uwe Sörgel

Introduction

Occlusion-free views of drivable areas in aerial and satellite imagery are required in various applications, such as city modeling, mapping, and autonomous driving. However, these areas, including roads and parking places, are often heavily occluded by vehicles, buildings, bridges, pedestrians, trees, and other roads. Although recent deep learning algorithms have made significant contributions to image inpainting for object removal, there is no suitable solution for specific tasks such as the automatic removal of occlusions in aerial images. This work investigates a practical learning-based approach that eliminates occlusions and restores the relevant features of the target areas. In addition to plain road surfaces and parking places, features such as lane markings and curbstones are also reconstructed, since vehicles rely on them in general transportation infrastructure.

Methods

The most significant challenge in occlusion removal is the lack of information that remains once the extracted occlusions are removed from the original image. Classical approaches fill the missing regions using a mathematical model, so the results can be generated automatically from an input image and its corresponding mask. In contrast, deep learning approaches require ground truth in order to train the networks adequately. This leads to another problem: there is no corresponding occlusion-free ground truth for the raw dataset.

In this work, two kinds of algorithms are implemented. Because of the lack of ground truth, two new datasets are derived from the DLR-SkyScapes [1] dataset. The first consists of occlusion-free images of drivable areas together with random binary occlusion-shaped masks; the second consists of original/synthesized image pairs and the corresponding RGB occlusion masks.

1. Image inpainting algorithms

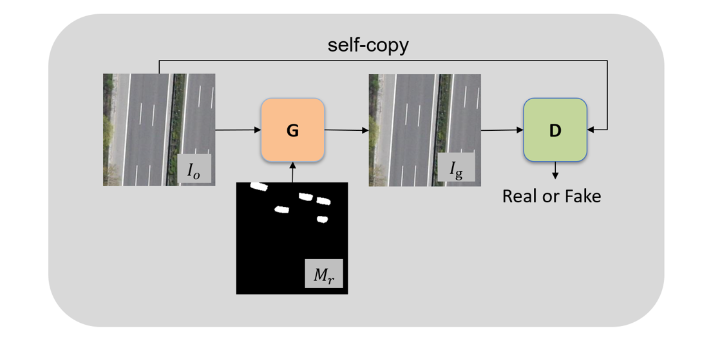

As general solutions to image restoration, inpainting algorithms are used in the first stage. In the training process, shown in Figure 1, the occlusion-free training image Io and a random artificial mask Mr are first given to the network. The generator G then receives the element-wise product of the original image and the inverted mask, in which the pixel values in the masked regions are missing. Afterwards, the restored result Ig produced by the generator is passed to the discriminator D, which tries to distinguish it from the original input image. Repeating this procedure improves the network, and thereby also the predictions obtained at test time.
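The generator input described above, the element-wise product of the image and the inverted mask, can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the mask convention (1 marks a missing pixel, 0 a kept pixel), the function name `mask_image`, and the random example data are hypothetical and not taken from the thesis.

```python
import numpy as np

def mask_image(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Zero out the masked pixels of an H x W x C image.

    Assumed convention (not from the thesis): mask == 1 marks a hole,
    mask == 0 marks a pixel that is kept.
    """
    if image.shape[:2] != mask.shape:
        raise ValueError("mask must match the image height and width")
    inverted = 1.0 - mask.astype(image.dtype)   # 1 = keep, 0 = missing
    return image * inverted[..., np.newaxis]    # broadcast over channels

# Hypothetical example data: a random 256 x 256 RGB patch and one rectangular hole.
rng = np.random.default_rng(0)
I_o = rng.random((256, 256, 3), dtype=np.float32)
M_r = np.zeros((256, 256), dtype=np.uint8)
M_r[100:140, 60:180] = 1

generator_input = mask_image(I_o, M_r)
```

In this sketch the pixels inside the hole become zero while all other pixels are passed through unchanged, which is the information the generator G has to work with.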

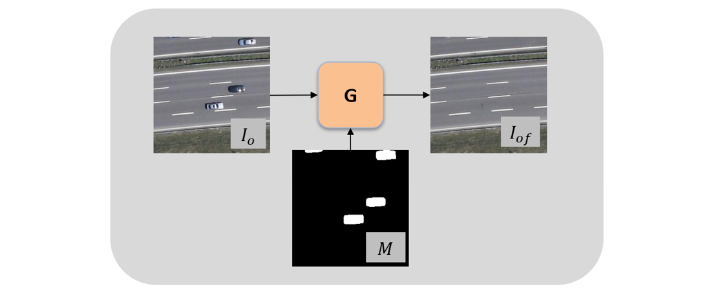

In the test process (Figure 2), the occluded image Io and its matching mask M are fed to the trained network. Ideally, this yields a reconstructed occlusion-free image Iof that preserves structure and has consistent texture.

2. Adapted shadow removal algorithms

Image inpainting networks can remove occlusions, but they do not address the limitations of the small and unbalanced occlusion-free dataset. In addition, inpainting methods reconstruct the masked images solely under the guidance of binary masks and are not aware of the occlusions themselves. In contrast, some previous works on shadow removal learn the properties of shadows by first creating artificial shadows and then removing them. Inspired by these works, we adapt such algorithms to our synthesized dataset with artificial RGB occlusions.

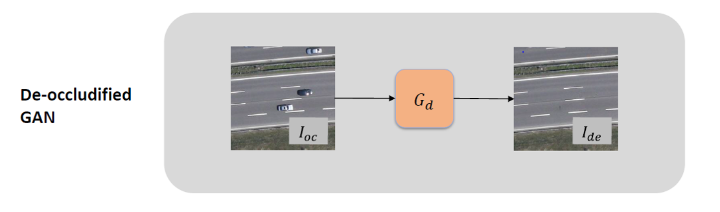

The training workflow forms a cycle architecture. Figure 3 shows two GAN-based networks, the Occludified GAN and the De-occludified GAN. Go and Do denote the generator and discriminator of the Occludified GAN, whereas Gd and Dd denote the generator and discriminator of the De-occludified GAN. As a first step, the input image patch Io and the matching random mask Mr are fed to the Occludified GAN. Next, the generated occluded output Ioc is passed to Do, which compares it with the synthesized ground truth Igt, obtained by replacing the pixels of Io in the masked area with those of Mr. The De-occludified GAN takes the output Ioc of the Occludified GAN as its input. Using the same mask Mr, Gd produces the de-occluded image Ide, which Dd compares with its ground truth, the original patch Io.
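The synthesized ground truth Igt, built by element-wise replacement of the masked area, could look roughly like the following NumPy sketch. It assumes that the RGB occlusion mask is black outside the occlusion and that any non-black pixel belongs to the occlusion; this convention, the function name `synthesize_occluded`, and the example values are hypothetical and not taken from the thesis.

```python
import numpy as np

def synthesize_occluded(image: np.ndarray, rgb_mask: np.ndarray):
    """Paste the non-black pixels of an RGB occlusion mask onto an image.

    Assumption (not from the thesis): a pixel belongs to the occlusion
    if any of its RGB channels in rgb_mask is non-zero.
    """
    if image.shape != rgb_mask.shape:
        raise ValueError("image and rgb_mask must have the same shape")
    footprint = np.any(rgb_mask > 0, axis=-1)              # H x W boolean
    occluded = np.where(footprint[..., np.newaxis], rgb_mask, image)
    return occluded, footprint

# Hypothetical example: a random occlusion-free patch and one fake occlusion.
rng = np.random.default_rng(0)
I_o = rng.integers(1, 256, size=(256, 256, 3), dtype=np.uint8)
M_r = np.zeros_like(I_o)
M_r[80:120, 40:100] = (30, 60, 200)                        # fake occlusion colour

I_gt, footprint = synthesize_occluded(I_o, M_r)
```

Under these assumptions, `I_gt` serves as the target for the Occludified GAN, while the untouched patch `I_o` remains the target for the De-occludified GAN.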

In the test process for real-world occlusion removal, only the generator Gd of the De-occludified GAN is needed to generate the de-occluded image Ide. Because the network predicts the mask itself, the only input is the original occluded image Ioc.

Results

The experiments indicate that only the occlusion-free dataset is usable in practical applications. The best-performing inpainting and shadow removal approaches, StructureFlow [2] and Ghost-free [3], are compared in Figure 5. Ghost-free [3] produces visually more plausible results than StructureFlow [2].

After testing, all result patches can be stitched back into a full image. The stitched image is slightly smaller than the original, because its width and height must be multiples of the crop size of 256. Figure 6 shows stitching details for the two methods. Both algorithms are affected by shadows, and Ghost-free [3] gives the more suitable result.
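The size restriction can be illustrated with a small NumPy sketch of cropping and stitching. It assumes non-overlapping 256 x 256 tiles and that the remainder at the right and bottom borders is discarded; the text only states that the stitched size is a multiple of 256, so the tiling scheme, the function names, and the 600 x 900 example size are assumptions.

```python
import numpy as np

TILE = 256  # crop size stated in the text

def split_into_tiles(image: np.ndarray, tile: int = TILE):
    """Cut an image into non-overlapping tiles, dropping border remainders."""
    rows, cols = image.shape[0] // tile, image.shape[1] // tile
    tiles = [
        image[r * tile:(r + 1) * tile, c * tile:(c + 1) * tile]
        for r in range(rows)
        for c in range(cols)
    ]
    return tiles, rows, cols

def stitch_tiles(tiles, rows: int, cols: int) -> np.ndarray:
    """Reassemble row-major ordered tiles into one image."""
    strips = [
        np.concatenate(tiles[r * cols:(r + 1) * cols], axis=1)
        for r in range(rows)
    ]
    return np.concatenate(strips, axis=0)

# Hypothetical 600 x 900 RGB image; in practice each tile would pass
# through the trained network before stitching.
image = np.arange(600 * 900 * 3, dtype=np.uint32).reshape(600, 900, 3)
tiles, rows, cols = split_into_tiles(image)
stitched = stitch_tiles(tiles, rows, cols)
print(stitched.shape)  # (512, 768, 3)
```

Here a 600 x 900 input shrinks to 512 x 768, since only two full tiles fit vertically and three horizontally.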

Conclusion

This thesis explores the possibility of automatically removing vehicles and occlusions from aerial images, with a potential adaptation to satellite images. Experiments show that the best adapted shadow removal network, Ghost-free [3], produces the most desirable outputs without mask guidance.

Although this work demonstrates that the chosen direction is promising for automatic occlusion removal, it is limited by the network capacity and the unbalanced datasets. Future work should therefore generate a larger and more balanced occlusion-free dataset to improve the generalization of the network. In addition, further constraints on structural and architectural aspects should be considered for the best-performing algorithms.

References

[1] S. Azimi, C. Henry, L. Sommer, A. Schaumann, and E. Vig, "Skyscapes -- Fine-Grained Semantic Understanding of Aerial Scenes," in International Conference on Computer Vision (ICCV), October 2019.

[2] Y. Ren, X. Yu, R. Zhang, T. H. Li, S. Liu, and G. Li, "StructureFlow: Image Inpainting via Structure-aware Appearance Flow," in IEEE International Conference on Computer Vision (ICCV), 2019.

[3] X. Cun, C.-M. Pun, and C. Shi, "Towards Ghost-free Shadow Removal via Dual Hierarchical Aggregation Network and Shadow Matting GAN," 2019. arXiv: 1911.08718 [cs.CV].

Contact

Uwe Sörgel

Prof. Dr.-Ing.

Head of Institute, Academic Advisor