Decai Chen

Fast Automatic 3D Reconstruction for Household Objects using Consumer RGB-D Cameras

Duration of the Thesis: 6 months

Completion: March

Supervisor: M. Sc. Jochen Lindermayr (Fraunhofer IPA)

Examiner: Prof. Dr.-Ing. Norbert Haala

Over the last decades, 3D reconstruction of object models has been a popular research topic in computer vision. Such models are useful for many applications, including robotics, 3D printing, games and movies, augmented/virtual reality, online shopping, and the preservation of cultural heritage.

In this thesis, we developed an automatic RGB-D-based 3D reconstruction system for household objects that generates an omnidirectional, textured 3D model of an unknown object in at most one and a half minutes.

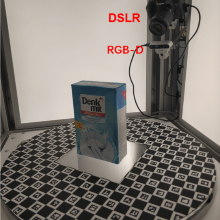

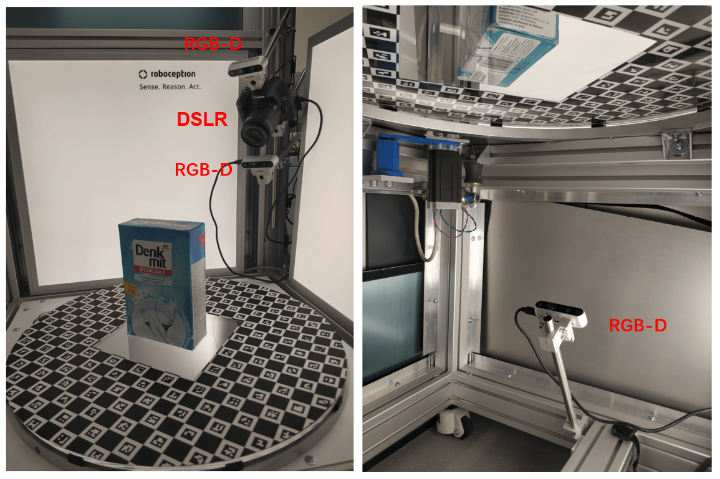

We first calibrate all sensors involved in this framework, namely the RGB-D cameras (Intel RealSense D435) and an additional DSLR camera. Before data collection, an operator places the object of interest on the turntable. Because we aim for the maximal level of automation, this is the only manual step in the whole framework. Once the reconstruction pipeline is started, RGB and depth data from the RGB-D cameras and, optionally, high-resolution RGB data from the DSLR camera are collected while a glass turntable rotates the object through one full revolution.

During the rotation, two ChArUco marker boards, flipped relative to each other and with central holes in which the object lies, define a global coordinate system. Given the intrinsic parameters of all cameras, the Perspective-n-Point (PnP) method estimates the transformation between each RGB camera's coordinate system and the marker board coordinate system. The bottom marker board frame is transformed into the top board frame, which serves as the global frame. The poses of the depth images then follow from the known relationship between the infrared (depth) sensor and the color sensor.
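The frame chaining described above can be sketched in Python with NumPy. This is a minimal sketch, not the thesis implementation. It assumes that the PnP solver returns an axis-angle vector `rvec` and a translation `tvec` that map bottom-board coordinates into color-camera coordinates (the convention of OpenCV's `cv2.solvePnP`), that the bottom-to-top board transform `T_top_from_bottom` is known from calibration, and that `T_color_from_ir` holds the camera's depth-to-color extrinsics. All function names are hypothetical.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector (as returned by PnP solvers) -> 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def make_T(R, t):
    """Build a 4x4 homogeneous rigid transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = np.asarray(t, dtype=float).reshape(3)
    return T


def invert_T(T):
    """Invert a rigid transform without a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    Ti = np.eye(4)
    Ti[:3, :3] = R.T
    Ti[:3, 3] = -R.T @ t
    return Ti


def depth_pose_in_global(rvec, tvec, T_top_from_bottom, T_color_from_ir):
    """Pose of the depth (IR) sensor in the global (top-board) frame.

    rvec, tvec        : PnP result mapping bottom-board points into the color camera frame.
    T_top_from_bottom : fixed board-to-board transform (assumed known from calibration).
    T_color_from_ir   : depth-to-color extrinsics of the RGB-D camera.
    """
    T_color_from_bottom = make_T(rodrigues(rvec), tvec)
    T_bottom_from_color = invert_T(T_color_from_bottom)  # camera pose in the board frame
    return T_top_from_bottom @ T_bottom_from_color @ T_color_from_ir
```

For a camera that observes the top board directly, the first two factors would collapse into the inverse of that camera's own PnP pose relative to the top board.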

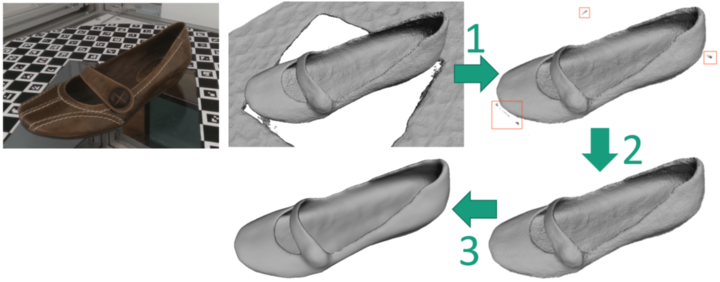

After that, all aligned depth frames are integrated incrementally into a global TSDF volume using a GPU implementation. To compute the TSDF values, we improve on the original KinectFusion implementation by using the slope distance weighted by the incidence angle, rather than the depth distance with a constant weight. The Marching Cubes algorithm is then used to extract the surface mesh from the TSDF volume.
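A CPU sketch of a single fusion step may help make the two distance definitions concrete. It is a sketch under stated assumptions, not the GPU implementation from the thesis: "slope distance" is interpreted here as the signed distance measured along the viewing ray, the weight is taken as the cosine of the incidence angle between the surface normal and the viewing ray, normals are assumed to be precomputed from the depth map, and the function name and the parameters `trunc` and `w_max` are hypothetical.

```python
import numpy as np


def integrate_frame(tsdf, weight, voxels_cam, depth, normals, K, trunc, w_max=100.0):
    """Fuse one depth frame into a flat TSDF grid (arrays are updated in place).

    tsdf, weight : (N,) arrays, one entry per voxel (tsdf initialised to 1, weight to 0).
    voxels_cam   : (N, 3) voxel centres already transformed into this depth camera's frame.
    depth        : (H, W) depth image in metres (0 = no measurement).
    normals      : (H, W, 3) unit surface normals in the camera frame (assumed precomputed).
    K            : 3x3 depth intrinsics; trunc: truncation distance in metres.
    """
    H, W = depth.shape
    x, y, z = voxels_cam[:, 0], voxels_cam[:, 1], voxels_cam[:, 2]
    z_safe = np.where(z > 0, z, 1.0)  # avoid division by zero for voxels behind the camera
    u = np.round(K[0, 0] * x / z_safe + K[0, 2]).astype(int)
    v = np.round(K[1, 1] * y / z_safe + K[1, 2]).astype(int)
    idx = np.flatnonzero((z > 0) & (u >= 0) & (u < W) & (v >= 0) & (v < H))
    u, v = u[idx], v[idx]
    D = depth[v, u]

    p = voxels_cam[idx]
    r = np.linalg.norm(p, axis=1)  # range of each voxel along its viewing ray
    # Slope distance: measured range minus voxel range along the same ray.
    # By similar triangles, the measured point on this ray has range r * D / z.
    sdf = r * D / p[:, 2] - r
    # The depth-distance baseline would instead be: sdf = D - p[:, 2]

    # Incidence-angle weight: |cos| of the angle between surface normal and viewing ray.
    ray = p / r[:, None]
    w = np.abs(np.sum(normals[v, u] * ray, axis=1))

    # Skip missing depth, voxels far behind the surface, and grazing observations.
    ok = (D > 0) & (sdf >= -trunc) & (w > 1e-6)
    idx, sdf, w = idx[ok], sdf[ok], w[ok]
    d = np.minimum(sdf, trunc) / trunc  # truncate and normalise to [-1, 1]

    w_old = weight[idx]
    tsdf[idx] = (w_old * tsdf[idx] + w * d) / (w_old + w)  # running weighted average
    weight[idx] = np.minimum(w_old + w, w_max)
```

Once all frames are fused, the `tsdf` array reshaped to the grid dimensions could be handed to an existing Marching Cubes implementation, for example scikit-image's `skimage.measure.marching_cubes(volume, level=0.0)`, to extract the zero-level surface.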

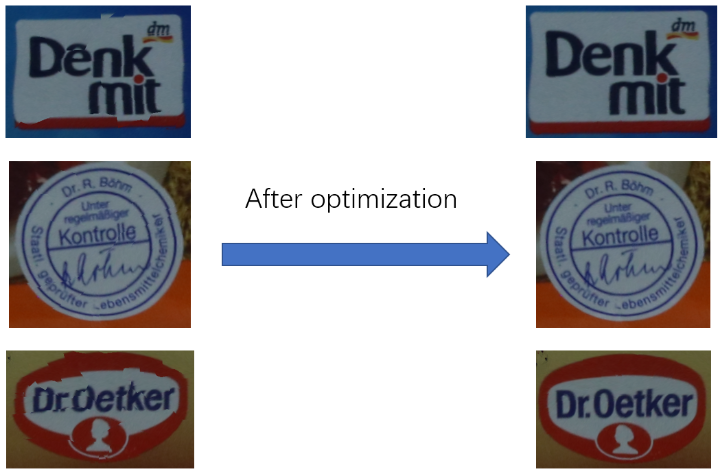

For texture mapping, the color images from the RGB-D cameras can be used. Alternatively, an additional DSLR camera is preferable because of its higher resolution. Before texture mapping, the poses of all color images involved are optimized for photometric consistency, which results in a more coherent appearance. Whereas Zhou et al. associate all visible vertices with a color image in their color map optimization, we propose to exclude vertices that are not frontal, again using the incidence angle, which leads to a significantly improved result.
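The frontal-vertex filter can be sketched as follows. This sketch assumes that a visibility (occlusion) test has already produced a boolean mask per color image and that "frontal" means the cosine of the angle between a vertex normal and the direction to the camera centre exceeds a threshold. The function name and the value `cos_min = 0.5` (about 60°) are hypothetical choices, not values taken from the thesis.

```python
import numpy as np


def frontal_vertex_mask(vertices, normals, cam_center, visible, cos_min=0.5):
    """Select the vertices that a given color image should be associated with.

    vertices, normals : (N, 3) mesh vertices and unit vertex normals in the global frame.
    cam_center        : (3,) optical centre of the color camera in the same frame.
    visible           : (N,) bool mask from a visibility/occlusion test (assumed given).
    cos_min           : hypothetical threshold on cos(incidence angle).
    """
    to_cam = np.asarray(cam_center, dtype=float) - vertices
    to_cam /= np.linalg.norm(to_cam, axis=1, keepdims=True)
    cos_inc = np.sum(normals * to_cam, axis=1)  # 1 = surface faces the camera head-on
    return visible & (cos_inc >= cos_min)
```

The camera centre is simply the translation part of the camera-to-global pose, for example `T[:3, 3]` for a 4x4 pose matrix `T`. Vertices rejected for one image can still receive color from other images that see them more frontally.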

Finally, we present the reconstructed textured 3D surface models of household objects. With their compelling visual quality and metric accuracy, the reconstructed models are suitable for visual inspection, measurement tasks, online shopping visualizations, 3D simulations, and robot vision.

Contact

Norbert Haala

apl. Prof. Dr.-Ing., Deputy Head of Institute